
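Following the previous post on setting up a fully distributed Hadoop environment, this post walks through some simple tests and basic usage of Hadoop.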

1. Simple HDFS usage tests

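- Create a directory

Create the directory /test/input on HDFS. The -p flag also creates the missing parent directory /test:

[hadoop@master ~]$ hadoop fs -mkdir -p /test/input

- List the new directory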

[hadoop@master ~]$ hadoop fs -ls /

Found 1 items

drwxr-xr-x - hadoop supergroup 0 2018-12-12 17:58 /test

- Upload a file to HDFS

Create a text file named words.txt:

[hadoop@master ~]$ vim words.txt
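vim is interactive; to create the file from a script instead, a one-liner such as the following works. The contents are hypothetical, since the post does not show the real words.txt, but these three lines are consistent with the counters the wordcount job reports later (3 map input records, 6 map output records, 35 bytes read) and with its final word counts.

```shell
#!/usr/bin/env bash
# Hypothetical contents for words.txt: the real file is not shown in this post.
# 3 lines, 6 words, 35 bytes in total, matching the job counters reported later.
printf 'hello hadoop\nhello Ouer\nhello root\n' > words.txt
```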

[hadoop@master ~]$ hadoop fs -put words.txt /test/input

[hadoop@master ~]$ hadoop fs -ls /test/input

Found 1 items

-rw-r--r-- 2 hadoop supergroup 35 2018-12-12 18:00 /test/input/words.txt

- Download a file from HDFS

Download the file you just uploaded into the local ~/data directory:

[hadoop@master ~]$ hadoop fs -get /test/input/words.txt ~/data
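To confirm that a file survives the trip through HDFS unchanged, the upload and download can be wrapped in a small check that compares the bytes with cmp. This is a sketch: hdfs_roundtrip is a hypothetical helper name, and besides the hadoop fs -put and -get commands used above it only adds the -f option, which lets -put overwrite a copy that is already in HDFS.

```shell
#!/usr/bin/env bash
# hdfs_roundtrip LOCAL_FILE HDFS_DIR (hypothetical helper)
# Uploads LOCAL_FILE into HDFS_DIR, downloads it into a temporary local directory,
# and returns 0 only if the downloaded copy is byte-identical to the original.
hdfs_roundtrip() {
  local src=$1 hdfs_dir=$2 name tmp rc
  name=$(basename "$src")
  tmp=$(mktemp -d)
  # -put -f overwrites a copy left in HDFS by an earlier run
  if hadoop fs -put -f "$src" "$hdfs_dir" &&
     hadoop fs -get "$hdfs_dir/$name" "$tmp/"; then
    cmp -s "$src" "$tmp/$name"   # exit status 0 means identical
    rc=$?
  else
    rc=1
  fi
  rm -rf "$tmp"   # always clean up the temporary download
  return "$rc"
}
```

For example, hdfs_roundtrip words.txt /test/input returns 0 when the copy read back from HDFS matches the local file.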

[hadoop@master ~]$ ls data/

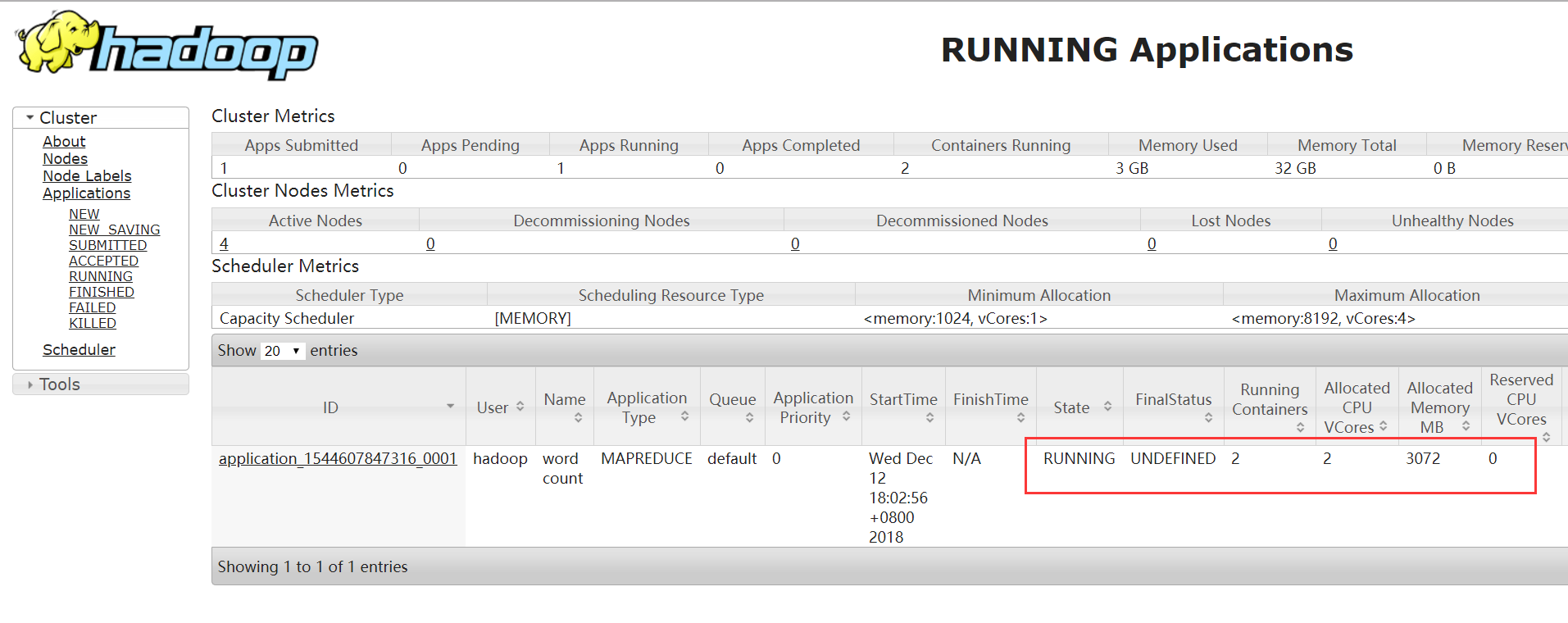

hadoopdata words.txt

2. Run the first MapReduce example: wordcount

Use the bundled wordcount example to check whether the Hadoop cluster can run jobs properly:

Run wordcount and write the results to the /test/output directory:

[hadoop@master ~]$ hadoop jar ~/apps/hadoop-2.9.1/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.1.jar wordcount /test/input /test/output

18/12/12 18:02:54 INFO client.RMProxy: Connecting to ResourceManager at slave3/172.20.2.110:8032

18/12/12 18:02:55 INFO input.FileInputFormat: Total input files to process : 1

18/12/12 18:02:56 INFO mapreduce.JobSubmitter: number of splits:1

18/12/12 18:02:56 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled

18/12/12 18:02:57 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1544607847316_0001

18/12/12 18:02:57 INFO impl.YarnClientImpl: Submitted application application_1544607847316_0001

18/12/12 18:02:58 INFO mapreduce.Job: The url to track the job: http://slave3:8088/proxy/application_1544607847316_0001/

18/12/12 18:02:58 INFO mapreduce.Job: Running job: job_1544607847316_0001

18/12/12 18:03:09 INFO mapreduce.Job: Job job_1544607847316_0001 running in uber mode : false

18/12/12 18:03:09 INFO mapreduce.Job: map 0% reduce 0%

18/12/12 18:03:17 INFO mapreduce.Job: map 100% reduce 0%

18/12/12 18:03:24 INFO mapreduce.Job: map 100% reduce 100%

18/12/12 18:03:25 INFO mapreduce.Job: Job job_1544607847316_0001 completed successfully

18/12/12 18:03:25 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=53

FILE: Number of bytes written=395007

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=139

HDFS: Number of bytes written=31

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=5738

Total time spent by all reduces in occupied slots (ms)=4348

Total time spent by all map tasks (ms)=5738

Total time spent by all reduce tasks (ms)=4348

Total vcore-milliseconds taken by all map tasks=5738

Total vcore-milliseconds taken by all reduce tasks=4348

Total megabyte-milliseconds taken by all map tasks=5875712

Total megabyte-milliseconds taken by all reduce tasks=4452352

Map-Reduce Framework

Map input records=3

Map output records=6

Map output bytes=59

Map output materialized bytes=53

Input split bytes=104

Combine input records=6

Combine output records=4

Reduce input groups=4

Reduce shuffle bytes=53

Reduce input records=4

Reduce output records=4

Spilled Records=8

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=217

CPU time spent (ms)=1580

Physical memory (bytes) snapshot=498122752

Virtual memory (bytes) snapshot=4297453568

Total committed heap usage (bytes)=292028416

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=35

File Output Format Counters

Bytes Written=31

Check the results:

[hadoop@master ~]$ hadoop fs -ls /test/output

Found 2 items

-rw-r--r-- 2 hadoop supergroup 0 2018-12-12 18:03 /test/output/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 31 2018-12-12 18:03 /test/output/part-r-00000

The empty _SUCCESS file marks that the job finished successfully; the actual results are in /test/output/part-r-00000:

[hadoop@master ~]$ hadoop fs -cat /test/output/part-r-00000

Ouer 1

hadoop 1

hello 3

root 1
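To cross-check this result without the cluster, the same counting can be approximated with standard Unix tools. This is a local sketch, not what Hadoop runs: local_wordcount is a hypothetical helper that splits on whitespace (the bundled WordCount mapper tokenizes with Java's StringTokenizer, which also splits on whitespace) and sorts with LC_ALL=C so that words come out in byte order, as Hadoop's Text keys do. That ordering is why Ouer, with its capital O, is listed before hadoop.

```shell
#!/usr/bin/env bash
# local_wordcount FILE (hypothetical helper): count whitespace-separated words and
# print "word<TAB>count" in byte order, the same layout as part-r-00000.
local_wordcount() {
  # translate every whitespace character to a newline; -s squeezes runs into one
  tr -s '[:space:]' '\n' < "$1" |
    grep -v '^$' |        # drop the empty line left by any leading whitespace
    LC_ALL=C sort |       # byte-order sort, like Hadoop's Text keys
    uniq -c |             # produces "  COUNT WORD"
    awk '{ printf "%s\t%s\n", $2, $1 }'
}
```

Running local_wordcount words.txt on the same input should print the same word/count pairs as part-r-00000.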

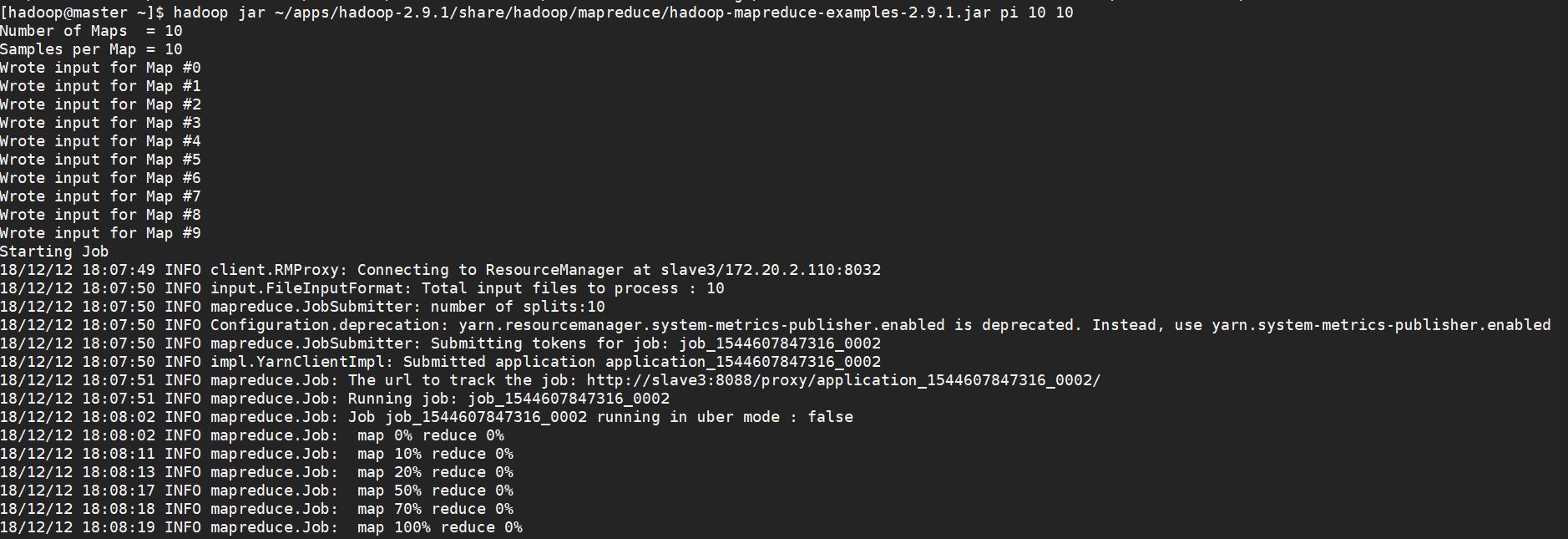

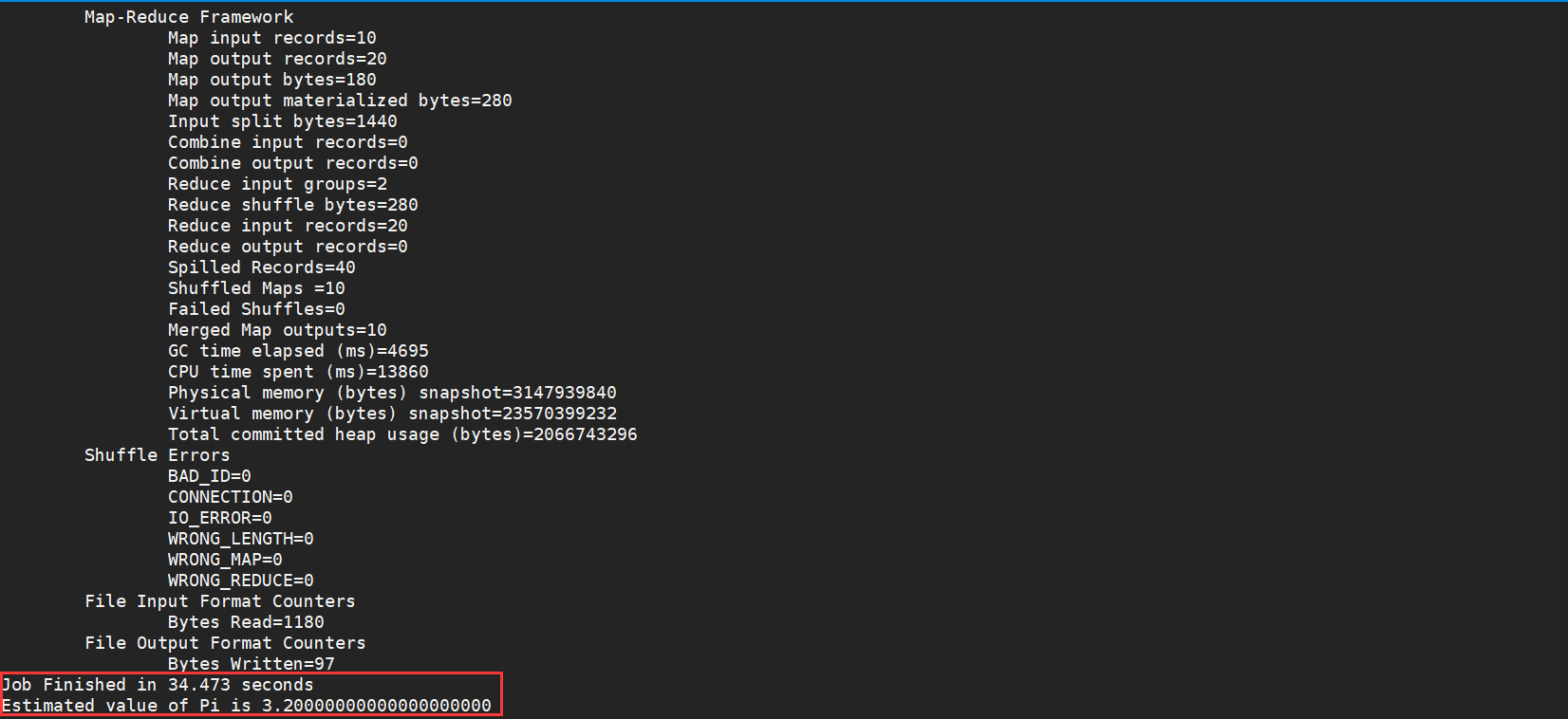
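MapReduce refuses to write into an output directory that already exists, so running the wordcount command a second time fails until /test/output is removed. A small wrapper can take care of that first. run_wordcount and EXAMPLES_JAR are hypothetical names, and the jar path defaults to the one used in this post. Note that hadoop fs -rm -r deletes the previous results (or moves them to the trash, if trash is enabled).

```shell
#!/usr/bin/env bash
# Hypothetical wrapper; EXAMPLES_JAR defaults to the jar path used in this post.
EXAMPLES_JAR=${EXAMPLES_JAR:-$HOME/apps/hadoop-2.9.1/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.1.jar}

# run_wordcount INPUT OUTPUT: delete OUTPUT if it exists, then run wordcount.
run_wordcount() {
  local input=$1 output=$2
  # -test -d succeeds only if the path exists and is a directory
  if hadoop fs -test -d "$output"; then
    hadoop fs -rm -r "$output" || return 1
  fi
  hadoop jar "$EXAMPLES_JAR" wordcount "$input" "$output"
}
```

With it, a rerun is simply run_wordcount /test/input /test/output.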

3. Run an example program: estimating π

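Use the bundled pi example to check whether the Hadoop cluster can run jobs properly.

Run the pi program:

[hadoop@master ~]$ hadoop jar ~/apps/hadoop-2.9.1/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.1.jar pi 10 10

This estimates π. Here pi is the name of the example program, the first 10 is the number of map tasks, and the second 10 is the number of sample points each map task generates (this follows from how the estimate is computed).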
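The pi example (class QuasiMonteCarlo in the Hadoop sources) uses a quasi-Monte Carlo method: it takes points from a 2-D Halton sequence (bases 2 and 3) in the unit square, counts how many land inside the inscribed circle, and estimates π ≈ 4 × inside / total, because the circle covers π/4 of the square. With pi 10 10 that is 10 × 10 = 100 points. The awk sketch below reproduces the idea locally; halton_pi is a hypothetical helper, and since its starting index may differ from Hadoop's, its digits need not match the cluster's output.

```shell
#!/usr/bin/env bash
# halton_pi N (hypothetical helper): estimate pi from the first N points of a
# 2-D Halton sequence (bases 2 and 3), counting hits in the inscribed circle.
halton_pi() {
  awk -v n="$1" '
    # radical inverse of i in base b: mirror its base-b digits around the radix point
    function radinv(i, b,    f, r) {
      f = 1 / b; r = 0
      while (i > 0) { r += f * (i % b); i = int(i / b); f /= b }
      return r
    }
    BEGIN {
      inside = 0
      for (k = 1; k <= n; k++) {
        # shift the point so the circle of radius 0.5 is centred at the origin
        x = radinv(k, 2) - 0.5; y = radinv(k, 3) - 0.5
        if (x * x + y * y <= 0.25) inside++
      }
      printf "%.6f\n", 4 * inside / n
    }'
}
```

For example, halton_pi 100 uses the same number of points as pi 10 10, and larger N gives estimates closer to π.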
