
1 Introduction

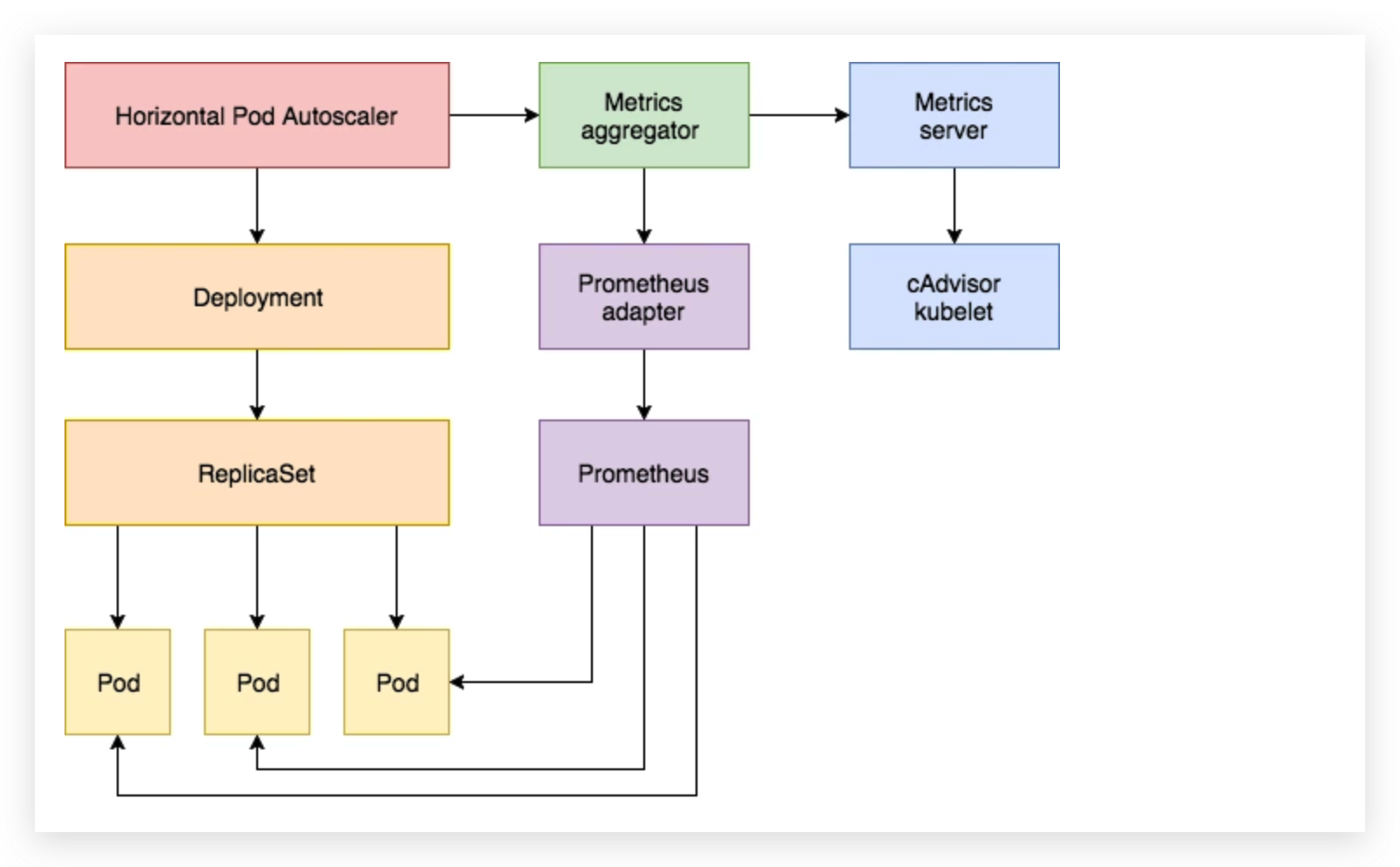

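One of the main advantages of using Kubernetes for container orchestration is that it makes horizontal scaling of applications very easy. The Horizontal Pod Autoscaler (HPA) can scale an application based on CPU and memory usage. In more complex scenarios we may also need to scale on custom metrics.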

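The Kubernetes API server exposes three metrics-related APIs:

- resource metrics API (metrics.k8s.io): CPU and memory usage of Pods and Nodes. It is intended for core components such as the HPA controller and kubectl top, and is typically served by metrics-server.
- custom metrics API (custom.metrics.k8s.io): arbitrary metrics attached to Kubernetes objects. It is intended mainly for the HPA controller.
- external metrics API (external.metrics.k8s.io): metrics not associated with any Kubernetes object. It is often served by the same adapter that provides custom metrics.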
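All three APIs are reached through the API server, for example with kubectl get --raw. The small Python sketch below is a side illustration, not part of the original setup. It only builds the raw request path for each API. The function names are hypothetical. The v1beta1 versions for metrics.k8s.io and external.metrics.k8s.io are assumptions that match the APIServices registered later in this article.

```python
# Hypothetical helpers that build raw API paths for `kubectl get --raw`.
# Only the shape of each path is illustrated; nothing is sent to a cluster.


def resource_metrics_path(namespace: str) -> str:
    # Resource metrics API: CPU/memory of all Pods in a namespace (metrics-server).
    return f"/apis/metrics.k8s.io/v1beta1/namespaces/{namespace}/pods"


def custom_pod_metric_path(namespace: str, metric: str, pod: str = "*",
                           version: str = "v1beta1") -> str:
    # Custom metrics API: a metric describing Pods; "*" selects every Pod.
    return (f"/apis/custom.metrics.k8s.io/{version}"
            f"/namespaces/{namespace}/pods/{pod}/{metric}")


def external_metric_path(namespace: str, metric: str) -> str:
    # External metrics API: a metric not tied to any Kubernetes object.
    return f"/apis/external.metrics.k8s.io/v1beta1/namespaces/{namespace}/{metric}"


if __name__ == "__main__":
    print(custom_pod_metric_path("default", "nginx_vts_server_requests_per_second",
                                 version="v1beta2"))
```

For v1beta2, the custom-metrics path produced here is the same one queried with kubectl get --raw in section 2.3.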

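Besides CPU and memory, we can also autoscale on custom monitoring metrics, which requires Prometheus Adapter. Prometheus monitors application load and a wide range of cluster metrics. Prometheus Adapter takes the metrics that Prometheus has collected and exposes them through the API server. HPA objects can then use those metrics directly in their scaling policies.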

2 Environment Setup

The manifests are hosted on GitHub:

    git clone https://github.com/honest1y/k8s-prom.git && cd k8s-prom

2.1 Prometheus

    ➜ k8s-prom git:(main) ✗ ls
    README.md demoyaml grafana prometheus prometheus-adapter
    ➜ k8s-prom git:(main) ✗ kubectl create ns prom
    ➜ k8s-prom git:(main) ✗ kubectl apply -f prometheus/
    ➜ k8s-prom git:(main) ✗ k get po -n prom
    NAME                          READY   STATUS    RESTARTS   AGE
    prometheus-69fd7945d8-5zkgc   1/1     Running   0          8s
    ➜ k8s-prom git:(main) ✗ k get svc -n prom
    NAME         TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
    prometheus   NodePort   172.17.255.10   <none>        9090:31456/TCP   34s

Open port 31456 on any node to reach the Prometheus UI. Throughout this walkthrough, k is a shell alias for kubectl.

2.2 demo

First, we deploy a sample application on which to test autoscaling driven by Prometheus metrics. The manifest is as follows:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: hpa-prom-demo
    spec:
      selector:
        matchLabels:
          app: nginx-server
      template:
        metadata:
          labels:
            app: nginx-server
        spec:
          containers:
          - name: nginx-demo
            image: cnych/nginx-vts:v1.0
            resources:
              limits:
                cpu: 50m
              requests:
                cpu: 50m
            ports:
            - containerPort: 80
              name: http
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: hpa-prom-demo
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "80"
        prometheus.io/path: "/status/format/prometheus"
    spec:
      ports:
      - port: 80
        targetPort: 80
        name: http
      selector:
        app: nginx-server
      type: NodePort

The application exposes nginx-vts metrics on port 80 at the /status/format/prometheus endpoint. The Prometheus configuration already enables auto-discovery of Endpoints, so adding the annotations to the Service object is enough for Prometheus to scrape these metrics. For easier testing we use a NodePort Service. Now create the resources:

    ➜ k8s-prom git:(main) ✗ k apply -f demoyaml/demo.yaml
    deployment.apps/hpa-prom-demo created
    service/hpa-prom-demo created
    ➜ k8s-prom git:(main) ✗ k get svc,pod
    NAME                    TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
    service/hpa-prom-demo   NodePort    172.17.255.253   <none>        80:31150/TCP   5s
    service/kubernetes      ClusterIP   172.17.252.1     <none>        443/TCP        161m

    NAME                                READY   STATUS    RESTARTS   AGE
    pod/hpa-prom-demo-bbb6c65bb-shtjc   1/1     Running   0          6s

Once the deployment is complete, use the following commands to check that the application responds and that the metrics endpoint returns data:

    [root@VM-1-6-centos ~]# curl 172.17.255.253
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
        body {
            width: 35em;
            margin: 0 auto;
            font-family: Tahoma, Verdana, Arial, sans-serif;
        }
    </style>
    </head>
    <body>
    <h1>Welcome to nginx!</h1>
    <p>If you see this page, the nginx web server is successfully installed and
    working. Further configuration is required.</p>

    <p>For online documentation and support please refer to
    <a href="http://nginx.org/">nginx.org</a>.<br/>
    Commercial support is available at
    <a href="http://nginx.com/">nginx.com</a>.</p>

    <p><em>Thank you for using nginx.</em></p>
    </body>
    </html>

    [root@VM-1-6-centos ~]# curl 172.17.255.253/status/format/prometheus
    # HELP nginx_vts_info Nginx info
    # TYPE nginx_vts_info gauge
    nginx_vts_info{hostname="hpa-prom-demo-bbb6c65bb-shtjc",version="1.13.12"} 1
    # HELP nginx_vts_start_time_seconds Nginx start time
    # TYPE nginx_vts_start_time_seconds gauge
    nginx_vts_start_time_seconds 1654525705.287
    # HELP nginx_vts_main_connections Nginx connections
    # TYPE nginx_vts_main_connections gauge
    nginx_vts_main_connections{status="accepted"} 4
    nginx_vts_main_connections{status="active"} 3
    nginx_vts_main_connections{status="handled"} 4
    nginx_vts_main_connections{status="reading"} 0
    nginx_vts_main_connections{status="requests"} 13
    nginx_vts_main_connections{status="waiting"} 2
    nginx_vts_main_connections{status="writing"} 1
    # HELP nginx_vts_main_shm_usage_bytes Shared memory [ngx_http_vhost_traffic_status] info
    # TYPE nginx_vts_main_shm_usage_bytes gauge
    nginx_vts_main_shm_usage_bytes{shared="max_size"} 1048575
    nginx_vts_main_shm_usage_bytes{shared="used_size"} 3510
    nginx_vts_main_shm_usage_bytes{shared="used_node"} 1
    # HELP nginx_vts_server_bytes_total The request/response bytes
    # TYPE nginx_vts_server_bytes_total counter
    # HELP nginx_vts_server_requests_total The requests counter
    # TYPE nginx_vts_server_requests_total counter
    # HELP nginx_vts_server_request_seconds_total The request processing time in seconds
    # TYPE nginx_vts_server_request_seconds_total counter
    # HELP nginx_vts_server_request_seconds The average of request processing times in seconds
    # TYPE nginx_vts_server_request_seconds gauge
    # HELP nginx_vts_server_request_duration_seconds The histogram of request processing time
    # TYPE nginx_vts_server_request_duration_seconds histogram
    # HELP nginx_vts_server_cache_total The requests cache counter
    # TYPE nginx_vts_server_cache_total counter
    nginx_vts_server_bytes_total{host="_",direction="in"} 2861
    nginx_vts_server_bytes_total{host="_",direction="out"} 42991
    nginx_vts_server_requests_total{host="_",code="1xx"} 0
    nginx_vts_server_requests_total{host="_",code="2xx"} 12
    nginx_vts_server_requests_total{host="_",code="3xx"} 0
    nginx_vts_server_requests_total{host="_",code="4xx"} 0
    nginx_vts_server_requests_total{host="_",code="5xx"} 0
    nginx_vts_server_requests_total{host="_",code="total"} 12
    nginx_vts_server_request_seconds_total{host="_"} 0.000
    nginx_vts_server_request_seconds{host="_"} 0.000
    nginx_vts_server_cache_total{host="_",status="miss"} 0
    nginx_vts_server_cache_total{host="_",status="bypass"} 0
    nginx_vts_server_cache_total{host="_",status="expired"} 0
    nginx_vts_server_cache_total{host="_",status="stale"} 0
    nginx_vts_server_cache_total{host="_",status="updating"} 0
    nginx_vts_server_cache_total{host="_",status="revalidated"} 0
    nginx_vts_server_cache_total{host="_",status="hit"} 0
    nginx_vts_server_cache_total{host="_",status="scarce"} 0
    nginx_vts_server_bytes_total{host="*",direction="in"} 2861
    nginx_vts_server_bytes_total{host="*",direction="out"} 42991
    nginx_vts_server_requests_total{host="*",code="1xx"} 0
    nginx_vts_server_requests_total{host="*",code="2xx"} 12
    nginx_vts_server_requests_total{host="*",code="3xx"} 0
    nginx_vts_server_requests_total{host="*",code="4xx"} 0
    nginx_vts_server_requests_total{host="*",code="5xx"} 0
    nginx_vts_server_requests_total{host="*",code="total"} 12
    nginx_vts_server_request_seconds_total{host="*"} 0.000
    nginx_vts_server_request_seconds{host="*"} 0.000
    nginx_vts_server_cache_total{host="*",status="miss"} 0
    nginx_vts_server_cache_total{host="*",status="bypass"} 0
    nginx_vts_server_cache_total{host="*",status="expired"} 0
    nginx_vts_server_cache_total{host="*",status="stale"} 0
    nginx_vts_server_cache_total{host="*",status="updating"} 0
    nginx_vts_server_cache_total{host="*",status="revalidated"} 0
    nginx_vts_server_cache_total{host="*",status="hit"} 0
    nginx_vts_server_cache_total{host="*",status="scarce"} 0

The metric we care about here is nginx_vts_server_requests_total, the total number of requests. It is a Counter, and we will use it to decide whether the application should be scaled.
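A Counter only ever increases, so its raw value says little about current load. What matters is how fast it grows. The minimal Python sketch below uses only the standard library and is not part of the original setup. It reads nginx_vts_server_requests_total out of text like the output above and turns two scrapes into a per-second rate. The helper names are hypothetical. The rate calculation is a simplification of Prometheus' rate(), which also extrapolates to the edges of its window.

```python
import re

# One sample line of the Prometheus text format, e.g.
# nginx_vts_server_requests_total{host="*",code="total"} 12
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>[^}]*)\})?\s+(?P<value>\S+)')
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def read_counter(text: str, name: str, **want: str) -> float:
    """Return the value of the first `name` sample whose labels include `want`."""
    for line in text.splitlines():
        if not line or line.startswith("#"):
            continue  # skip blank lines and # HELP / # TYPE comments
        m = SAMPLE_RE.match(line)
        if m is None or m.group("name") != name:
            continue
        labels = dict(LABEL_RE.findall(m.group("labels") or ""))
        if all(labels.get(k) == v for k, v in want.items()):
            return float(m.group("value"))
    raise KeyError(f"{name} {want} not found")


def per_second_rate(v0: float, t0: float, v1: float, t1: float) -> float:
    """Simplified per-second rate of a counter between two scrapes at t0 and t1.

    A drop in value means the counter was reset (e.g. the Pod restarted),
    so the increase is taken to be the new value itself.
    """
    increase = v1 - v0 if v1 >= v0 else v1
    return increase / (t1 - t0)
```

For example, if two scrapes 60 seconds apart read 12 and 42 for code="total", the rate is (42 - 12) / 60 = 0.5 requests per second.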

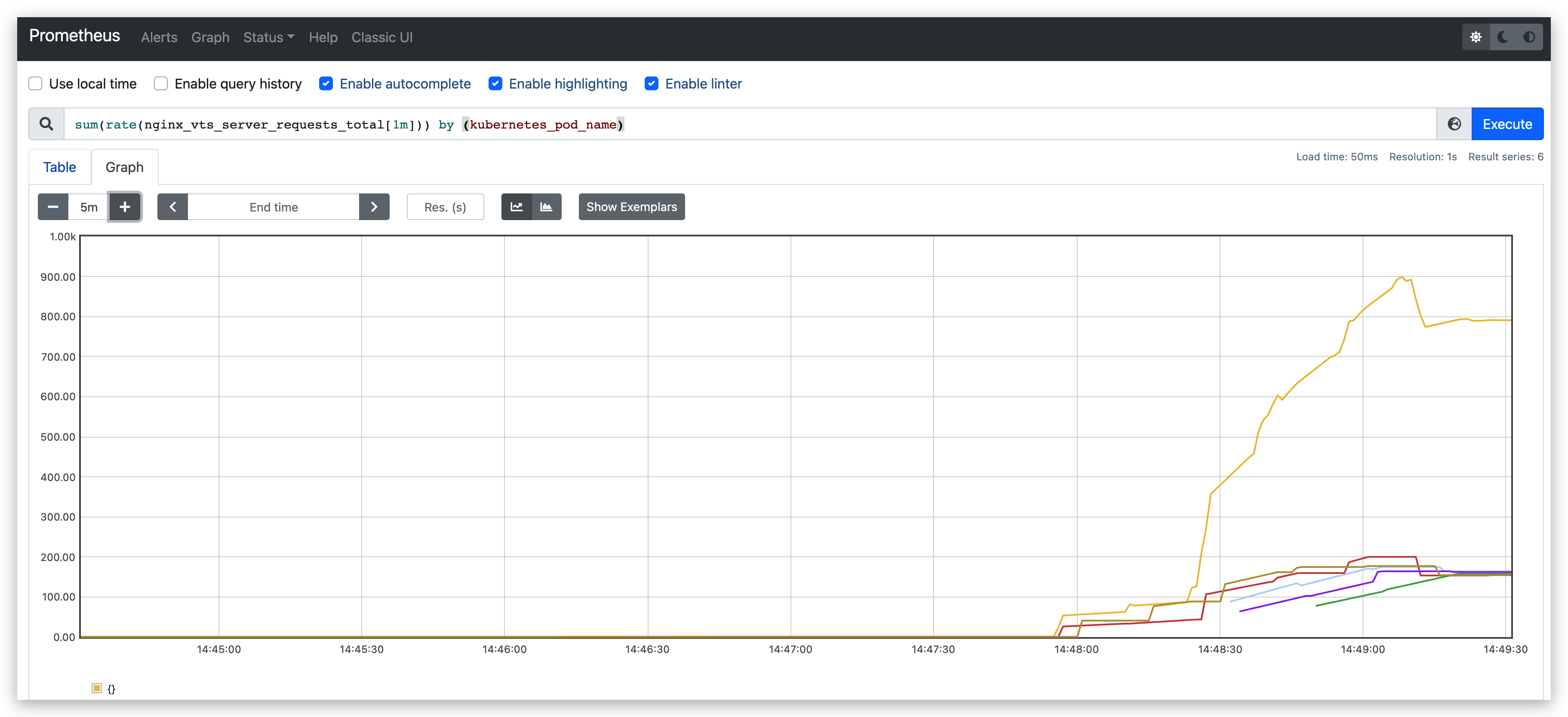

Next, we install Prometheus Adapter in the cluster and add a rule that tracks per-Pod requests. Any Prometheus metric can be used for HPA, as long as a query can retrieve it (both the metric name and its value).

We define the following rule:

    rules:
    - seriesQuery: 'nginx_vts_server_requests_total'
      seriesFilters: []
      resources:
        overrides:
          kubernetes_namespace:
            resource: namespace
          kubernetes_pod_name:
            resource: pod
      name:
        matches: "^(.*)_total"
        as: "${1}_per_second"
      metricsQuery: (sum(rate(<<.Series>>{<<.LabelMatchers>>}[1m])) by (<<.GroupBy>>))

This is a parameterized Prometheus query, where:

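- seriesQuery: the Prometheus query used to discover series. Every metric it returns can be used by HPA.
- seriesFilters: the discovered series may include unwanted ones, which can be filtered out here.
- resources: seriesQuery only returns metrics. To get the metric for a particular Pod, the Pod's name and namespace must be used as label selectors, so resources maps metric labels to Kubernetes resource types. The most common mappings are to pod and namespace. There are two ways to define the mapping, overrides and template.
  - overrides: maps specific metric labels to Kubernetes resources. The example above maps the kubernetes_namespace and kubernetes_pod_name labels to the namespace and pod resources. Pods and namespaces belong to the core API group, so no group needs to be specified. When the metric for a Pod is queried, the adapter automatically adds the Pod's name and namespace as label matchers. As another example, nginx: {group: "apps", resource: "deployment"} maps the metric's nginx label to the deployment resource in the apps API group.
  - template: a Go template. For example, with template: "kube_<<.Group>>_<<.Resource>>", if <<.Group>> is apps and <<.Resource>> is deployment, the metric's kube_apps_deployment label is mapped to the deployment resource.
- name: renames the metric. Renaming is needed because some metrics only ever increase, such as those ending in _total. Their raw values are meaningless for HPA, so we usually use their rate instead. Since the value is then a rate, the name should no longer end in _total.
  - matches: a regular expression matched against the metric name; capture groups are allowed.
  - as: the new name. If left empty, it defaults to $1, i.e. the first capture group.
- metricsQuery: the actual Prometheus query. seriesQuery determines which metrics are available to HPA; metricsQuery is what runs when the value of a particular metric is requested. The query uses rate and grouping, which solves the problem of monotonically increasing metrics described above.
  - Series: the metric name.
  - LabelMatchers: the additional label matchers. Here these are only pod and namespace, which is why both must be mapped in resources beforehand.
  - GroupBy: the pod name label, which likewise must be mapped in resources.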
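The Python sketch below models this rule end to end. It applies the name regex and fills the three metricsQuery placeholders for a query about specific Pods. It is an illustration under assumptions, not the adapter's code. prometheus-adapter is written in Go and uses Go templates and Go's ${1} expansion, which Python writes as \g<1>. The adapter's exact label-value escaping may also differ. The helper names are hypothetical, and so is the choice to join several pod names into one regex matcher with |.

```python
import re
from typing import List

# Hypothetical Python model of the adapter rule above (the real adapter is Go).
RULE = {
    "matches": r"^(.*)_total",
    # Go's "${1}_per_second" written with Python's group syntax.
    "as": r"\g<1>_per_second",
    # metric label -> Kubernetes resource, as in resources.overrides
    "overrides": {"kubernetes_namespace": "namespace", "kubernetes_pod_name": "pod"},
    "metricsQuery": "(sum(rate(<<.Series>>{<<.LabelMatchers>>}[1m])) by (<<.GroupBy>>))",
}


def exposed_name(series: str) -> str:
    """Name under which the series is exposed (the name.matches / name.as step)."""
    m = re.match(RULE["matches"], series)
    return m.expand(RULE["as"]) if m else series


def render_query(series: str, namespace: str, pods: List[str]) -> str:
    """Fill the metricsQuery placeholders for a query about the given Pods."""
    # Invert the overrides so we can look up the label for each resource.
    label_for = {res: label for label, res in RULE["overrides"].items()}
    pod_regex = "|".join(pods)
    matchers = ",".join([
        f'{label_for["namespace"]}="{namespace}"',
        f'{label_for["pod"]}=~"{pod_regex}"',
    ])
    return (RULE["metricsQuery"]
            .replace("<<.Series>>", series)
            .replace("<<.LabelMatchers>>", matchers)
            .replace("<<.GroupBy>>", label_for["pod"]))
```

exposed_name("nginx_vts_server_requests_total") yields nginx_vts_server_requests_per_second, the name that appears in the adapter's API resource list in section 2.3. render_query shows why the namespace and pod labels must be mapped in resources: without that mapping there would be no labels to put into <<.LabelMatchers>> and <<.GroupBy>>.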

2.3 Prometheus-adapter

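Generate a serving key and certificate for the adapter, signed by the cluster CA, and store them in a Secret. The signing step expects the CA certificate and key at /etc/kubernetes/pki/, as on a kubeadm control-plane node.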

    ➜ k8s-prom git:(main) ✗ openssl genrsa -out serving.key 2048
    ➜ k8s-prom git:(main) ✗ openssl req -new -key serving.key -out serving.csr -subj "/CN=serving"
    ➜ k8s-prom git:(main) ✗ openssl x509 -req -in serving.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -out serving.crt -days 3650
    ➜ k8s-prom git:(main) ✗ kubectl create secret generic cm-adapter-serving-certs --from-file=./serving.key --from-file=./serving.crt -n prom

Deploy prometheus-adapter.

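If a Kubernetes cluster has both v1beta1.custom.metrics.k8s.io and v1beta2.custom.metrics.k8s.io registered, which one does HPA read custom metrics from? To find out, the adapter's APIService in these manifests is changed from v1beta1.custom.metrics.k8s.io to v1beta2.custom.metrics.k8s.io. The cluster already has v1beta1.custom.metrics.k8s.io, served by kube-system/hpa-metrics-service.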

    ➜ k8s-prom git:(main) ✗ kubectl apply -f prometheus-adapter/
    clusterrolebinding.rbac.authorization.k8s.io/custom-metrics:system:auth-delegator created
    rolebinding.rbac.authorization.k8s.io/custom-metrics-auth-reader created
    deployment.apps/custom-metrics-apiserver created
    clusterrolebinding.rbac.authorization.k8s.io/custom-metrics-resource-reader created
    serviceaccount/custom-metrics-apiserver created
    service/custom-metrics-apiserver created
    apiservice.apiregistration.k8s.io/v1beta2.custom.metrics.k8s.io created
    apiservice.apiregistration.k8s.io/v1beta1.external.metrics.k8s.io created
    clusterrole.rbac.authorization.k8s.io/custom-metrics-server-resources created
    configmap/adapter-config created
    clusterrole.rbac.authorization.k8s.io/custom-metrics-resource-reader created
    clusterrolebinding.rbac.authorization.k8s.io/hpa-controller-custom-metrics created
    ➜ k8s-prom git:(main) ✗ k get po -n prom
    NAME                                       READY   STATUS    RESTARTS   AGE
    custom-metrics-apiserver-9cb8b858b-rtf46   1/1     Running   0          6m17s
    prometheus-69fd7945d8-5zkgc                1/1     Running   0          14m
    ➜ k8s-prom git:(main) ✗ k get apiservice | grep -E "metrics-servic|metrics.k8s.io"
    v1beta1.custom.metrics.k8s.io   kube-system/hpa-metrics-service   True   172m
    v1beta2.custom.metrics.k8s.io   prom/custom-metrics-apiserver     True   7m13s
    v1beta1.metrics.k8s.io          kube-system/metrics-service       True   172m
    ➜ k8s-prom git:(main) ✗ kubectl get --raw="/apis/custom.metrics.k8s.io/v1beta2" | jq
    {
      "kind": "APIResourceList",
      "apiVersion": "v1",
      "groupVersion": "custom.metrics.k8s.io/v1beta2",
      "resources": [
        {
          "name": "namespaces/nginx_vts_server_requests_per_second",
          "singularName": "",
          "namespaced": false,
          "kind": "MetricValueList",
          "verbs": [
            "get"
          ]
        },
        {
          "name": "pods/nginx_vts_server_requests_per_second",
          "singularName": "",
          "namespaced": true,
          "kind": "MetricValueList",
          "verbs": [
            "get"
          ]
        }
      ]
    }

The nginx_vts_server_requests_per_second metric is now available. Next, check its current value:

    ➜ k8s-prom git:(main) ✗ kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta2/namespaces/default/pods/*/nginx_vts_server_requests_per_second" | jq .
    {
      "kind": "MetricValueList",
      "apiVersion": "custom.metrics.k8s.io/v1beta2",
      "metadata": {
        "selfLink": "/apis/custom.metrics.k8s.io/v1beta2/namespaces/default/pods/%2A/nginx_vts_server_requests_per_second"
      },
      "items": [
        {
          "describedObject": {
            "kind": "Pod",
            "namespace": "default",
            "name": "hpa-prom-demo-bbb6c65bb-shtjc",
            "apiVersion": "/v1"
          },
          "metric": {
            "name": "nginx_vts_server_requests_per_second",
            "selector": null
          },
          "timestamp": "2022-06-06T14:41:40Z",
          "value": "533m"
        }
      ]
    }

Output like the above indicates that the adapter is configured correctly.
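The value "533m" uses Kubernetes quantity notation, where the m suffix means milli, i.e. 0.533 requests per second. The Python sketch below reads a MetricValueList like the one above and averages the per-Pod values. That average is the figure a Pods-type HPA target is compared against. The sketch is an illustration with hypothetical helper names. It handles only a subset of quantity suffixes and is not the Kubernetes quantity parser.

```python
import json
from decimal import Decimal

# Decimal SI suffixes that Kubernetes quantities may carry. This is a subset:
# binary suffixes (Ki, Mi, ...) and the T/P/E suffixes are not handled.
_SUFFIXES = {
    "n": Decimal("1e-9"),
    "u": Decimal("1e-6"),
    "m": Decimal("1e-3"),
    "k": Decimal("1e3"),
    "M": Decimal("1e6"),
    "G": Decimal("1e9"),
}


def parse_quantity(q: str) -> Decimal:
    """Convert a quantity string such as "533m" into a Decimal (0.533)."""
    if q and q[-1] in _SUFFIXES:
        return Decimal(q[:-1]) * _SUFFIXES[q[-1]]
    return Decimal(q)  # plain number, e.g. "10"


def average_value(metric_value_list: str) -> Decimal:
    """Average the per-object values of a MetricValueList (assumes >= 1 item)."""
    items = json.loads(metric_value_list)["items"]
    values = [parse_quantity(item["value"]) for item in items]
    return sum(values) / len(values)
```

Applied to the single-item response above, the average is 0.533 requests per second, well below the target of 10 configured in section 3.1.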

3 Verification

3.1 Deploy the HPA

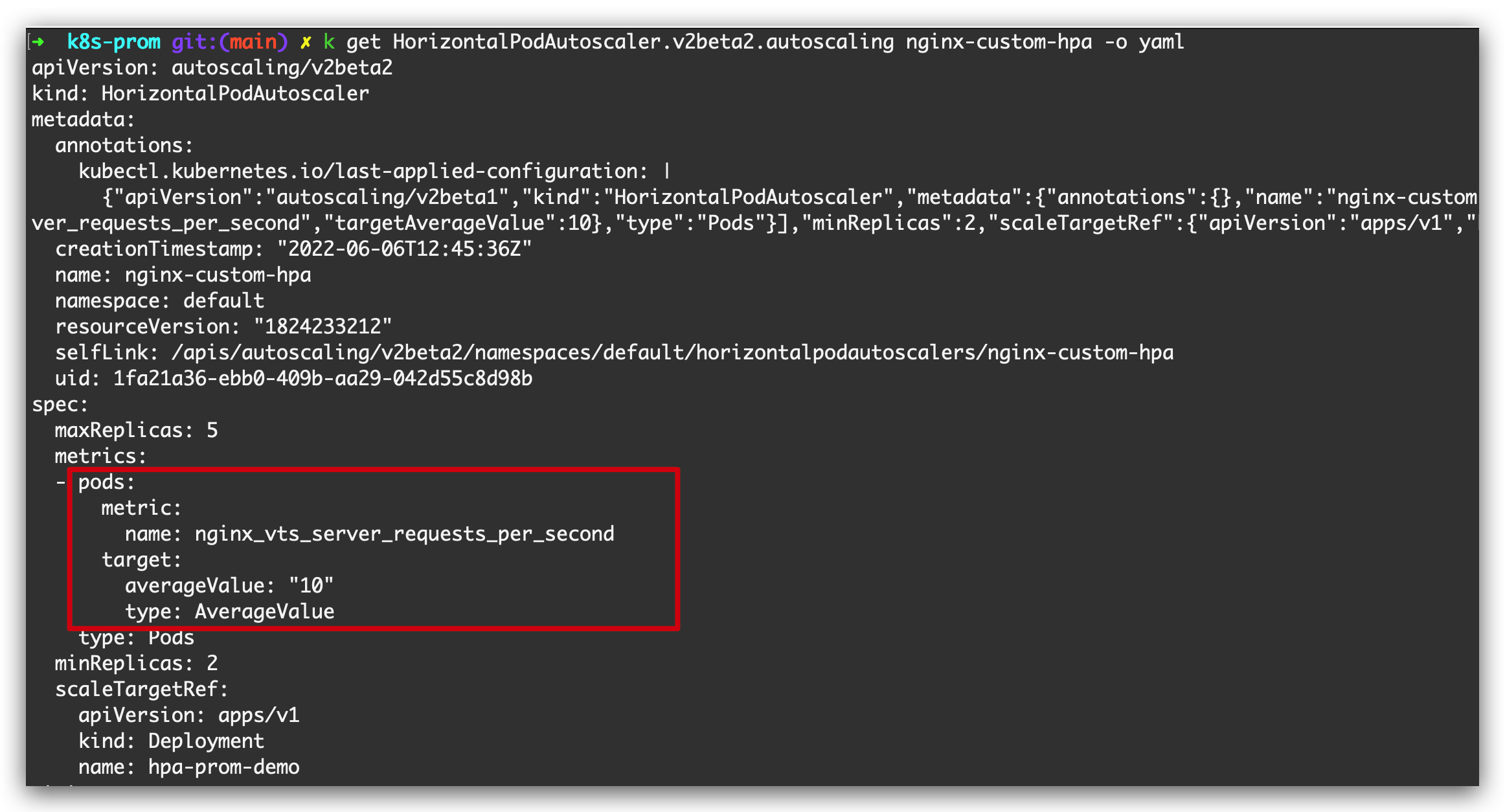

Next, deploy an HPA object that targets the custom metric above:

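    apiVersion: autoscaling/v2beta1
    kind: HorizontalPodAutoscaler
    metadata:
      name: nginx-custom-hpa
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: hpa-prom-demo
      minReplicas: 2
      maxReplicas: 5
      metrics:
      - type: Pods
        pods:
          metricName: nginx_vts_server_requests_per_second
          targetAverageValue: 10

If the average request rate per Pod exceeds 10 per second, the application is scaled out. Note that autoscaling/v2beta1 is no longer served as of Kubernetes 1.25. On newer clusters, write the same HPA with autoscaling/v2, using the metric/target form shown in section 3.2. Create the object directly: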

    ➜ k8s-prom git:(main) ✗ k get hpa
    NAME               REFERENCE                  TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
    nginx-custom-hpa   Deployment/hpa-prom-demo   533m/10   2         5         2          43s

    Name:                                                 nginx-custom-hpa
    Namespace:                                            default
    Labels:                                               <none>
    Annotations:                                          <none>
    CreationTimestamp:                                    Mon, 06 Jun 2022 22:45:28 +0800
    Reference:                                            Deployment/hpa-prom-demo
    Metrics:                                              ( current / target )
      "nginx_vts_server_requests_per_second" on pods:     533m / 10
    Min replicas:                                         2
    Max replicas:                                         5
    Deployment pods:                                      2 current / 2 desired
    Conditions:
      Type            Status  Reason              Message
      ----            ------  ------              -------
      AbleToScale     True    ReadyForNewScale    recommended size matches current size
      ScalingActive   True    ValidMetricFound    the HPA was able to successfully calculate a replica count from pods metric nginx_vts_server_requests_per_second
      ScalingLimited  False   DesiredWithinRange  the desired count is within the acceptable range
    Events:
      Type    Reason             Age  From                       Message
      ----    ------             ---- ----                       -------
      Normal  SuccessfulRescale  22s  horizontal-pod-autoscaler  New size: 2; reason: Current number of replicas below Spec.MinReplicas

The HPA object has taken effect. It enforces the minimum of 2 replicas, so one more Pod replica is created:

    ➜ k8s-prom git:(main) ✗ k get po
    NAME                            READY   STATUS    RESTARTS   AGE
    hpa-prom-demo-bbb6c65bb-5z2gt   1/1     Running   0          74s
    hpa-prom-demo-bbb6c65bb-shtjc   1/1     Running   0          18m

Next, put load on the application:

    while true; do wget -q -O- http://172.17.255.253; done

While the loop runs (for example in a separate terminal), check the Pods again:

    ➜ k8s-prom git:(main) ✗ k get po
    NAME                            READY   STATUS              RESTARTS   AGE
    hpa-prom-demo-bbb6c65bb-5259g   1/1     Running             0          16s
    hpa-prom-demo-bbb6c65bb-5z2gt   1/1     Running             0          2m35s
    hpa-prom-demo-bbb6c65bb-dzbln   0/1     ContainerCreating   0          1s
    hpa-prom-demo-bbb6c65bb-pr6gc   1/1     Running             0          16s
    hpa-prom-demo-bbb6c65bb-shtjc   1/1     Running             0          19m

The value of nginx_vts_server_requests_per_second has exceeded the threshold and triggered a scale-out.
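Both the scale-up to the minimum and the scale-out under load follow the replica formula documented for the HPA controller: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). For a Pods metric, the current value is the average across Pods. The Python sketch below is a simplified model with a hypothetical function name. It keeps the default 0.1 tolerance and the minReplicas/maxReplicas clamp. It ignores unready or missing Pods and any scaling behavior or stabilization windows, which the real controller also applies.

```python
import math
from typing import Sequence


def desired_replicas(current_replicas: int, pod_values: Sequence[float],
                     target_average: float, min_replicas: int, max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA calculation for one Pods metric with an average-value target."""
    current_average = sum(pod_values) / len(pod_values)
    ratio = current_average / target_average
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: keep current size
    else:
        desired = math.ceil(current_replicas * ratio)
    # The result is always kept within [minReplicas, maxReplicas].
    return max(min_replicas, min(max_replicas, desired))
```

Consider a single replica at 533m (0.533 requests per second) against a target of 10. The formula gives ceil(0.0533) = 1, and the clamp raises that to minReplicas = 2, matching the SuccessfulRescale event in section 3.1. Under heavy load, the result is capped at maxReplicas = 5.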

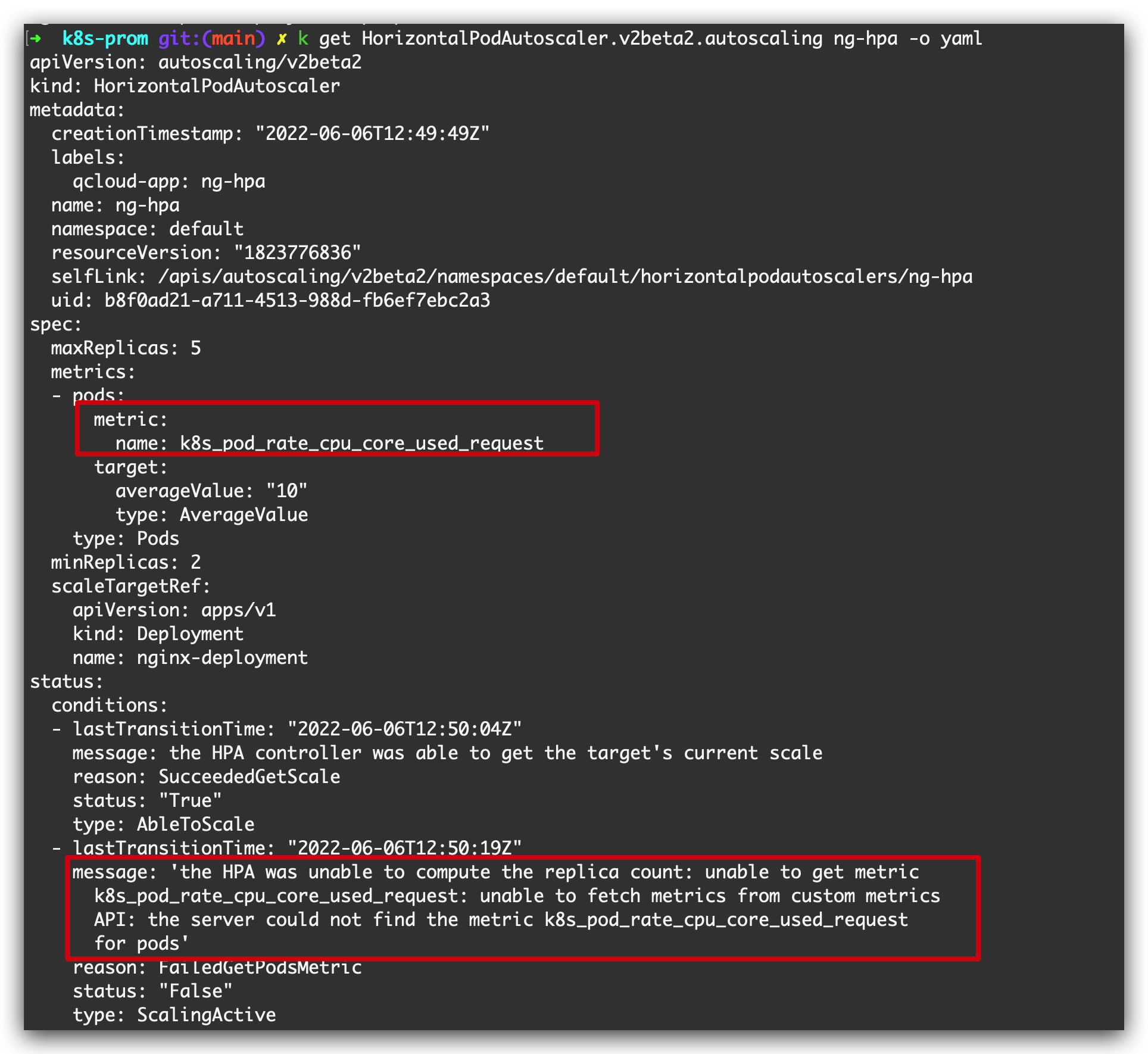

3.2 v1beta1 or v1beta2?

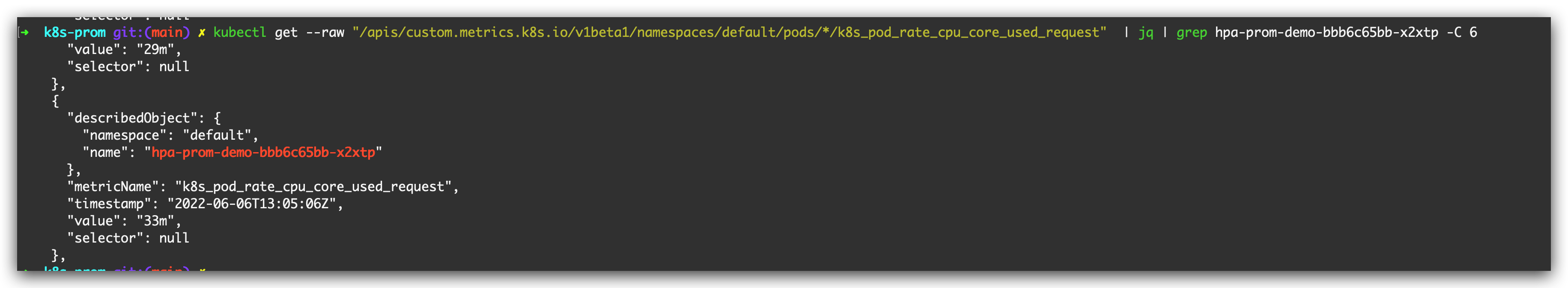

For comparison, deploy an HPA whose metric comes from v1beta1:

    ➜ k8s-prom git:(main) ✗ kubectl apply -f demoyaml/nginx-dp.yaml
    deployment.apps/nginx-deployment created

The HPA manifest:

    apiVersion: autoscaling/v2beta2
    kind: HorizontalPodAutoscaler
    metadata:
      labels:
        qcloud-app: ng-hpa
      name: ng-hpa
      namespace: default
    spec:
      maxReplicas: 5
      metrics:
      - pods:
          metric:
            name: k8s_pod_rate_cpu_core_used_request
          target:
            averageValue: "10"
            type: AverageValue
        type: Pods
      minReplicas: 2
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: hpa-prom-demo

The HPA reports that the v1beta1 metric cannot be found,

while the v1beta2 metric works normally.

Querying v1beta1 manually for this Pod's metric succeeds:

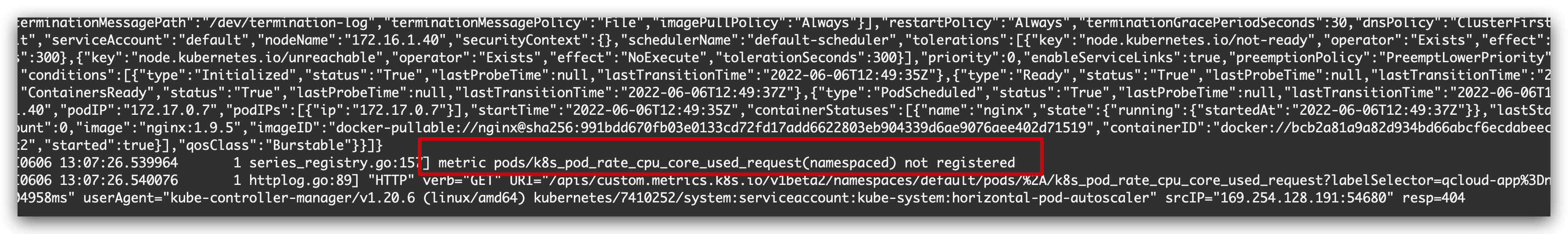

The prometheus-adapter logs contain the following error, saying that the metric is not registered:

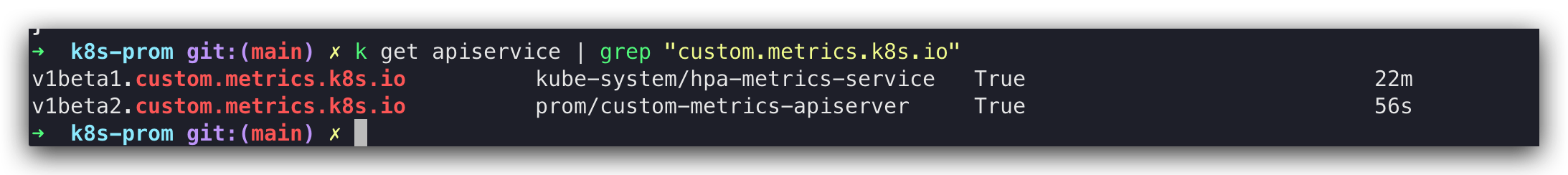

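So when a cluster has both v1beta1.custom.metrics.k8s.io and v1beta2.custom.metrics.k8s.io, v1beta2 takes precedence over v1beta1, and HPAs that rely on metrics served through v1beta1.custom.metrics.k8s.io stop working. The most likely explanation is that the HPA controller queries only the preferred version of the custom.metrics.k8s.io group. Once v1beta2 is registered it becomes the preferred version, as shown next. That version is served by prometheus-adapter, which has no k8s_pod_rate_cpu_core_used_request metric.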

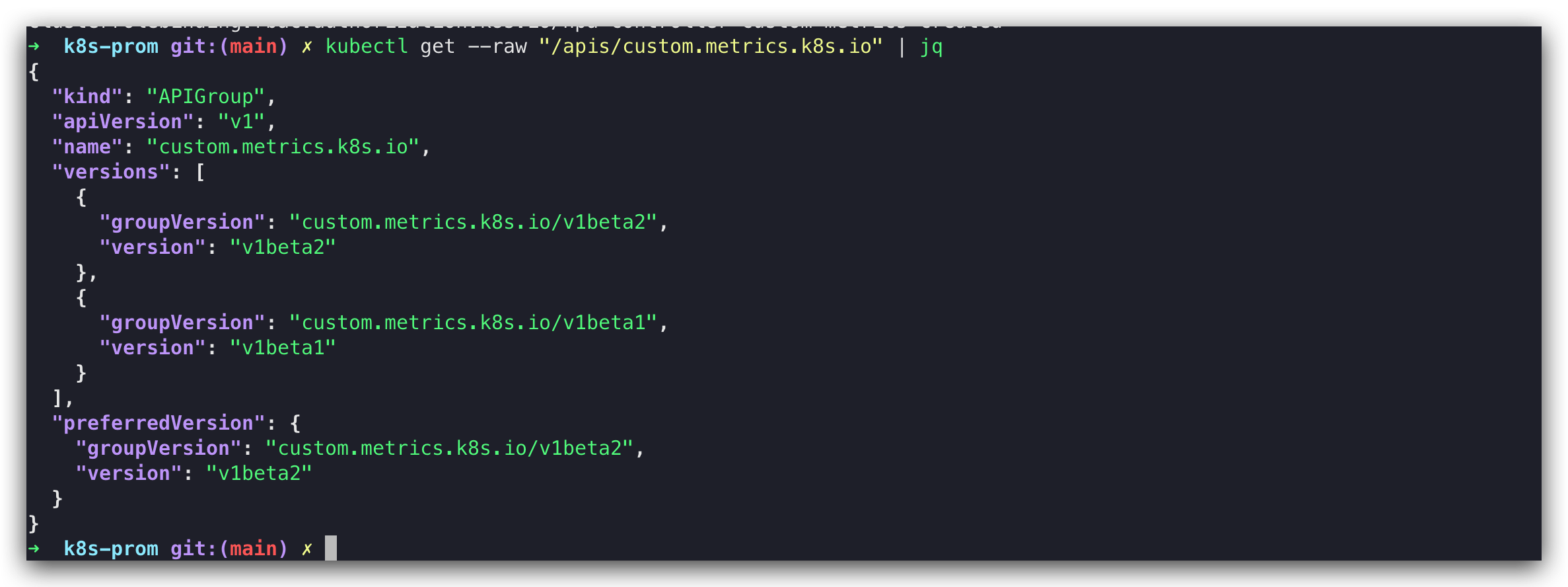

When only v1beta1 is present:

    ➜ k8s-prom git:(main) ✗ kubectl get --raw "/apis/custom.metrics.k8s.io" | jq
    {
      "kind": "APIGroup",
      "apiVersion": "v1",
      "name": "custom.metrics.k8s.io",
      "versions": [
        {
          "groupVersion": "custom.metrics.k8s.io/v1beta1",
          "version": "v1beta1"
        }
      ],
      "preferredVersion": {
        "groupVersion": "custom.metrics.k8s.io/v1beta1",
        "version": "v1beta1"
      }
    }

After v1beta2 is added:

preferredVersion changes to v1beta2.
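The switch in preferredVersion can be explained with the ordering rule documented for APIService objects. Versions within a group are sorted by spec.versionPriority, highest first. Ties are broken by Kubernetes version-string order: GA before beta before alpha, then higher numbers first, with non-Kubernetes-style strings last in lexicographic order. The Python sketch below implements that documented rule under its own hypothetical helper names; it is not the kube-aggregator source. The article does not show the versionPriority values of the two APIServices in this cluster, so the example assumes they are equal. In that case v1beta2 should win on the version string alone.

```python
import re
from functools import cmp_to_key
from typing import Dict, Optional, Tuple

# Kubernetes-style versions: v<major>, optionally followed by alpha<n> or beta<n>.
_KUBE_VERSION = re.compile(r"^v([1-9]\d*)(?:(alpha|beta)([1-9]\d*))?$")
_STABILITY = {None: 2, "beta": 1, "alpha": 0}  # GA > beta > alpha


def _kube_key(version: str) -> Optional[Tuple[int, int, int]]:
    m = _KUBE_VERSION.match(version)
    if m is None:
        return None
    major, stage, minor = m.groups()
    return (_STABILITY[stage], int(major), int(minor or 0))


def compare_versions(a: str, b: str) -> int:
    """Negative if version a sorts before (is preferred over) version b."""
    ka, kb = _kube_key(a), _kube_key(b)
    if ka is not None and kb is not None:
        return (ka < kb) - (ka > kb)  # larger key sorts first
    if ka is not None:
        return -1  # Kubernetes-style strings sort above all others
    if kb is not None:
        return 1
    return (a > b) - (a < b)  # others: plain lexicographic order


def preferred_version(version_priority: Dict[str, int]) -> str:
    """version_priority maps each version of one API group to its versionPriority."""
    def cmp(a: str, b: str) -> int:
        pa, pb = version_priority[a], version_priority[b]
        if pa != pb:
            return -1 if pa > pb else 1  # higher versionPriority first
        return compare_versions(a, b)
    return sorted(version_priority, key=cmp_to_key(cmp))[0]
```

With equal priorities, preferred_version({"v1beta1": 100, "v1beta2": 100}) returns "v1beta2". Giving v1beta1 a higher versionPriority would reverse the outcome. So checking spec.versionPriority on both APIServices is a reasonable first step when two adapters share the custom.metrics.k8s.io group.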