
1 Thanos 架构设计剖析

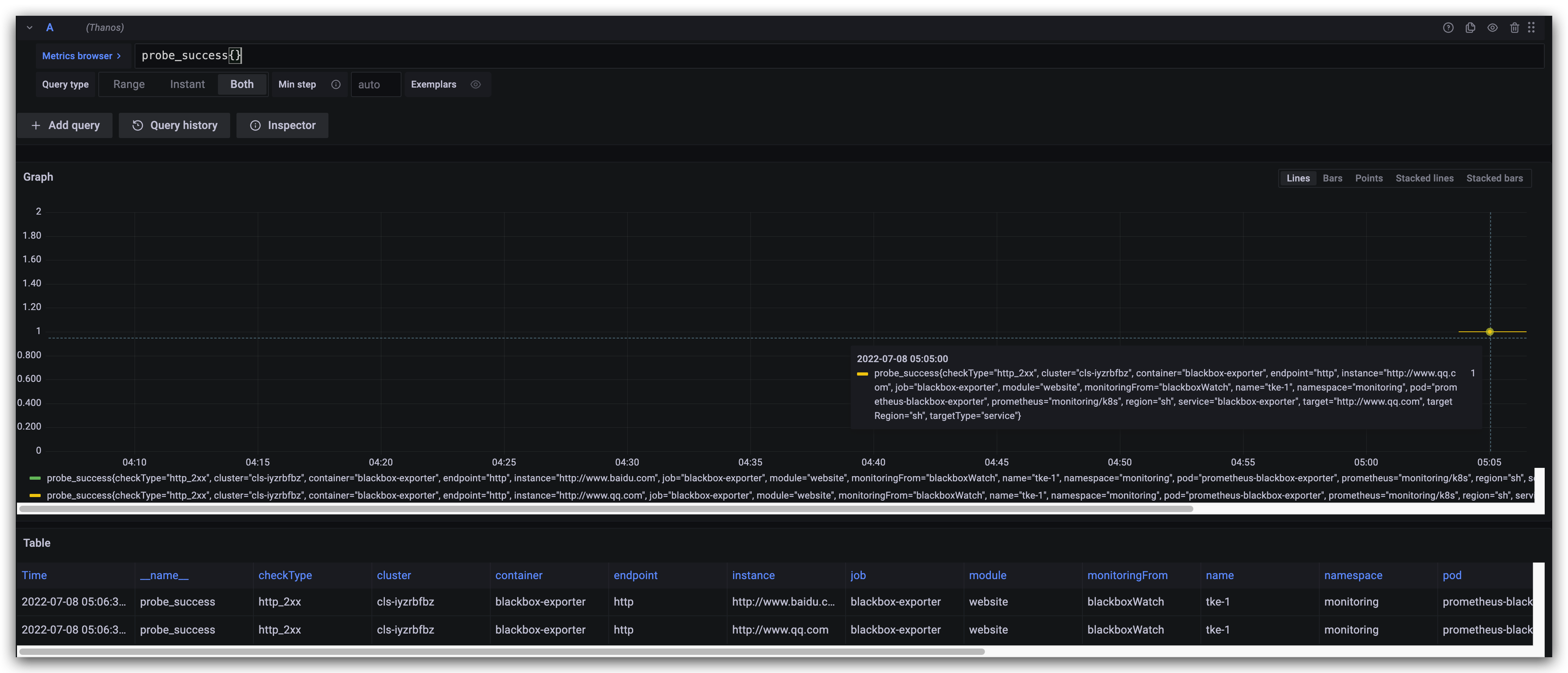

这张图中包含了 Thanos 的几个核心组件,但并不包括所有组件,为了便于理解,我们先不细讲,简单介绍下图中这几个组件的作用:

- Thanos Query: 实现了 Prometheus API,将来自下游组件提供的数据进行聚合最终返回给查询数据的 client (如 grafana),类似数据库中间件。

- Thanos Sidecar: 连接 Prometheus,将其数据提供给 Thanos Query 查询,并且/或者将其上传到对象存储,以供长期存储。

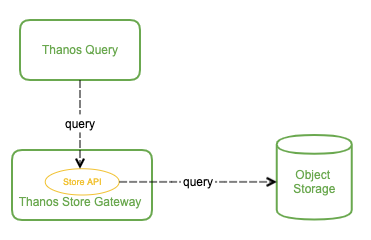

- Thanos Store Gateway: 将对象存储的数据暴露给 Thanos Query 去查询。

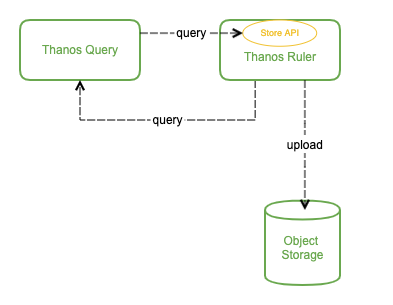

- Thanos Ruler: 对监控数据进行评估和告警,还可以计算出新的监控数据,将这些新数据提供给 Thanos Query 查询并且/或者上传到对象存储,以供长期存储。

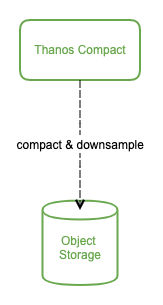

- Thanos Compact: 将对象存储中的数据进行压缩和降低采样率,加速大时间区间监控数据查询的速度。

1.1 Query 与 Sidecar

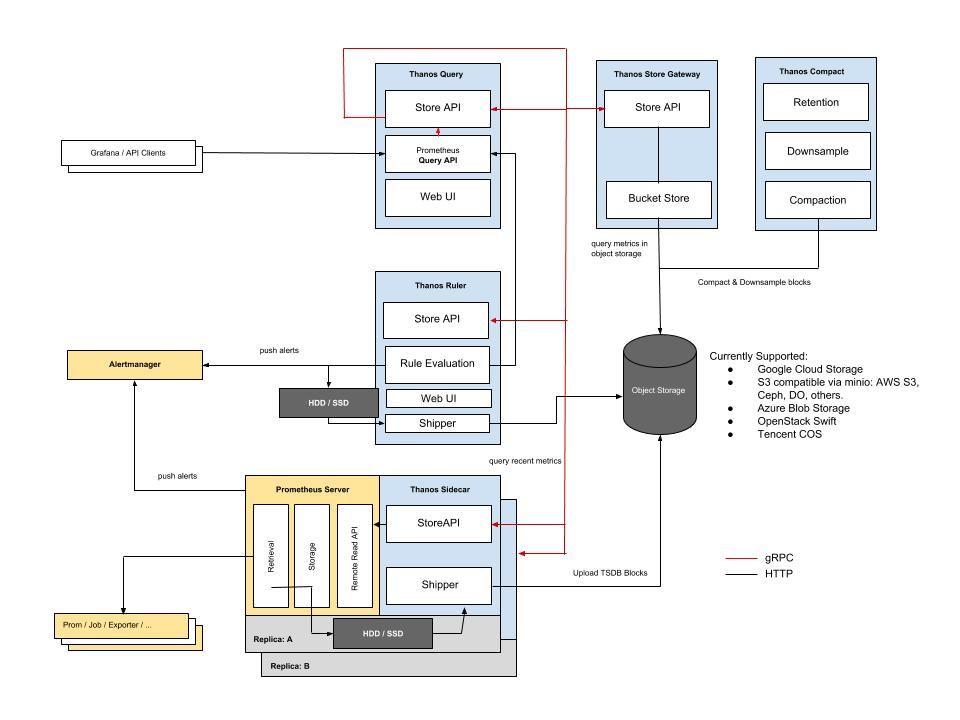

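First, monitoring data can no longer be queried directly from Prometheus, because there are many Prometheus instances and each one only knows about the data it scraped itself. This is reminiscent of database middleware: each database stores only part of the data, the middleware knows about all databases, and every query goes through it. On receiving a query, the middleware fetches data from the downstream databases, aggregates the results, and returns them to the client, which turns distributed storage into a single point of query.

Thanos follows a similar design. Thanos Query is the key entry point of this "middleware". It implements the Prometheus HTTP API and understands PromQL. Clients therefore query Thanos Query instead of Prometheus itself; Thanos Query fetches data from the downstream locations where it is stored, then aggregates and deduplicates it before returning it to the client. That is how queries across distributed Prometheus instances are achieved.

How does Thanos Query reach the scattered downstream data? Thanos defines an internal gRPC interface called the Store API. Other components expose their data to Thanos Query through this interface, which lets Thanos Query itself be deployed completely stateless, so it is highly available and easy to scale.

Where can the scattered data come from? First, Prometheus writes scraped data to local disk. To use the data spread across those disks directly, deploy a Sidecar alongside each Prometheus. The Sidecar implements the Thanos Store API; when Thanos Query sends it a query, the Sidecar reads the monitoring data from the Prometheus instance it is bound to and returns it to Thanos Query.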

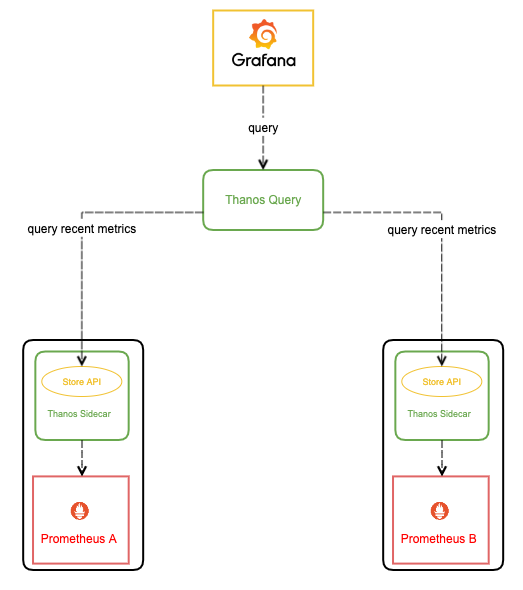

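Because Thanos Query can aggregate and deduplicate data, high availability is easy to achieve: run several replicas of the same Prometheus (each with a Sidecar) and let Thanos Query query all of the Sidecars. Even if one Prometheus instance was down for a while, the aggregated and deduplicated result is still complete.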
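Deduplication relies on the replicas carrying external labels that are identical except for one replica label. A minimal sketch of the `global.external_labels` section for two hand-configured replicas follows; the label names `cluster` and `replica` and their values are illustrative assumptions, not taken from this deployment (Prometheus Operator, used later in this article, adds a `prometheus_replica` label on its own).

```yaml
# prometheus.yml on replica A (label names and values are illustrative assumptions)
global:
  external_labels:
    cluster: cluster-a
    replica: "0"
---
# prometheus.yml on replica B: identical except for the replica label
global:
  external_labels:
    cluster: cluster-a
    replica: "1"
```

With labels like these, Thanos Query would be started with `--query.replica-label=replica`, so that series differing only in that label are merged into one.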

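This approach also fixes a flaw in the load-balancer-based Prometheus high availability described in the previous article: if one Prometheus instance goes down for a while and then recovers, its data is incomplete, and whenever the load balancer forwards a query to it, the result may have gaps.

However, disk space is limited, so the amount of monitoring data Prometheus can store is limited too. Prometheus is usually configured with a retention time (15 days by default) or a maximum data size, and it keeps deleting old data so the disk does not fill up. As a result, older monitoring data is no longer visible, which sometimes makes troubleshooting and statistics harder.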
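As a rough illustration of those two limits, the fragment below sets them on a Prometheus custom resource managed by Prometheus Operator. The values are assumptions chosen for the example; the comments name the Prometheus flags the fields correspond to.

```yaml
# Fragment of a Prometheus custom resource (values are illustrative assumptions)
spec:
  retention: 15d        # corresponds to --storage.tsdb.retention.time
  retentionSize: 50GB   # corresponds to --storage.tsdb.retention.size
```

Whichever limit is reached first triggers the cleanup of the oldest blocks.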

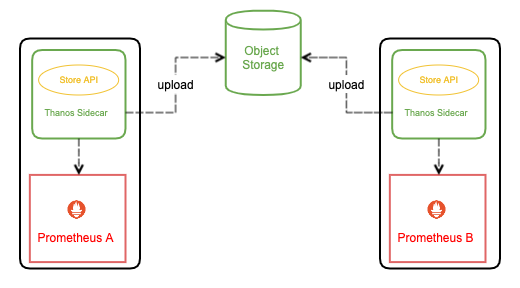

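For data that must be kept for a long time but is accessed infrequently, object storage is the ideal destination. All major cloud vendors offer object storage services, which have effectively unlimited capacity and are very cheap.

Several Thanos components can upload data (in Prometheus TSDB format) to various object stores for long-term retention, for example the Sidecar described above.

1.2 Store Gateway

How is the monitoring data uploaded to object storage queried? In theory Thanos Query could read object storage directly, but that would make its logic very heavy. As we have seen, Thanos defines the Store API, and any component implementing it can act as a data source for Thanos Query. Thanos Store Gateway also implements the Store API and exposes the data in object storage to Thanos Query. Internally it includes optimizations that speed up data retrieval: it caches the TSDB index, and it optimizes object storage requests (fetching all required data with as few requests as possible).

This gives us long-term storage of monitoring data. Since object storage capacity is effectively unlimited, data can in theory be kept for any length of time, so historical monitoring data stays queryable for troubleshooting and statistical analysis.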

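1.3 Ruler

One problem remains: Prometheus does more than store and query scraped data. It can also be configured with rules:

- Recording rules continuously compute new series and store them, so later queries use the precomputed series directly, which reduces query-time computation and speeds up queries.

- Alerting rules continuously evaluate whether an alert threshold has been reached and notify AlertManager to fire an alert when it has.

Because Prometheus is deployed in a distributed fashion, no single instance holds the complete data locally. Related data may live in several Prometheus instances, and a single Prometheus has no global view, so we cannot rely on Prometheus itself for this work. This is where Thanos Ruler comes in. It obtains global data by querying Thanos Query, computes new series according to the rules configuration, and stores them. It also exposes that data to Thanos Query through the Store API and can upload it to object storage for long-term retention (data uploaded to object storage is again exposed to Thanos Query through Thanos Store Gateway).

It may look as if Thanos Query and Thanos Ruler query each other, but there is no conflict: Thanos Ruler provides Thanos Query with the newly computed series, while Thanos Query provides Thanos Ruler with the global raw series it needs to compute them.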
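Thanos Ruler reads rule files in the same format as Prometheus. The sketch below shows one recording rule and one alerting rule evaluated against the global view; the metric `http_requests_total`, the rule names, and the thresholds are hypothetical and only serve as an illustration.

```yaml
# rules.yaml, passed to `thanos rule` with --rule-file (names and thresholds are illustrative)
groups:
- name: example-global-rules
  rules:
  # Recording rule: precompute a request rate across all Prometheus instances.
  - record: job:http_requests:rate5m
    expr: sum by (job) (rate(http_requests_total[5m]))
  # Alerting rule: fire when the global error ratio stays above 5% for 10 minutes.
  - alert: HighGlobalErrorRatio
    expr: |
      sum(rate(http_requests_total{code=~"5.."}[5m]))
        / sum(rate(http_requests_total[5m])) > 0.05
    for: 10m
    labels:
      severity: warning
    annotations:
      summary: Global HTTP error ratio is above 5%
```

Because the expressions are evaluated through Thanos Query, the sums cover series from every Prometheus instance rather than a single one.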

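At this point the core capabilities of Thanos are in place: a global query view, high availability, and long-term data retention, all while remaining fully compatible with Prometheus.

What else can be optimized?

1.4 Compact

With long-term storage available, we can query monitoring data over much larger time ranges. When the range is large, the amount of data to read is large as well, which makes queries very slow. When looking at a large time range we usually do not need full detail; a rough picture is enough. This is the job of Thanos Compact: it reads the data in object storage, compacts and downsamples it, and uploads the result back to object storage. Queries over large time ranges can then read only the compacted, downsampled data, which greatly reduces the amount of data read and speeds up the query.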
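Thanos Compact keeps raw data alongside 5-minute and 1-hour downsampled data, and each resolution can have its own retention. A hedged sketch of the container arguments follows; the durations are assumptions picked for the example, and the config path simply mirrors the one used in the manifests later in this article.

```yaml
# Fragment of the container args for `thanos compact` (durations are illustrative assumptions)
args:
- compact
- --wait                              # keep running and compact continuously
- --objstore.config-file=/etc/secret/objectstorage.yaml
- --retention.resolution-raw=30d      # keep raw samples for 30 days
- --retention.resolution-5m=90d       # keep 5-minute downsampled data for 90 days
- --retention.resolution-1h=365d      # keep 1-hour downsampled data for a year
```

If the retention flags are left unset, data at that resolution is kept indefinitely.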

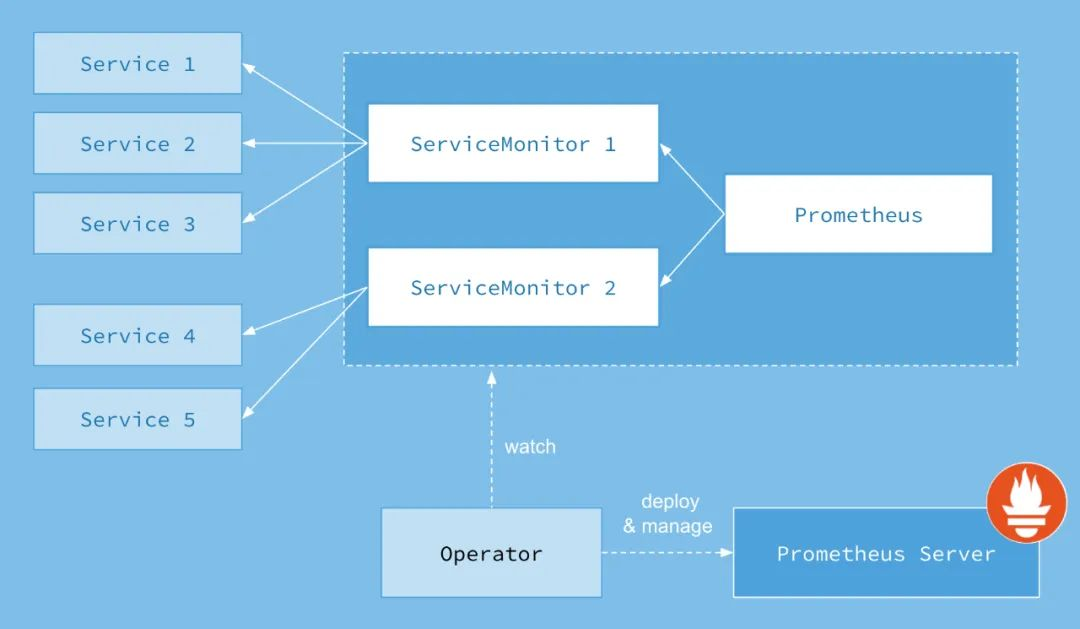

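There are currently three ways to deploy Thanos on Kubernetes:

- prometheus-operator: once prometheus-operator is installed in the cluster, Thanos can be deployed by creating custom resources.

- Community-contributed Helm charts: there are many versions, all aiming at one-command deployment of Thanos with Helm.

- kube-thanos: the official Thanos open-source project, containing jsonnet templates and YAML examples for deploying Thanos to Kubernetes.

2 Thanos Multi-Cluster Kubernetes Architecture

- In the diagram above, the data of the 3 clusters is collected into 3 separate COS object storage buckets; a single shared bucket can be configured instead.

- Thanos Store and Thanos Query in cluster A can be scaled as needed; the deployment example uses one of each.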

[root@VM-1-19-centos ~]# git clone https://github.com/honest1y/kubernetes_yaml.git

[root@VM-1-19-centos ~]# ls

kubernetes_yaml

[root@VM-1-19-centos ~]# cd kubernetes_yaml/thanos/thanos-server/

Run the resource creation commands directly from this directory.

2.1 Deploy the CRDs

Deploy cluster A

Prometheus is deployed with Prometheus Operator.

[root@VM-1-19-centos thanos-server]# kubectl create -f setup/

customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created

namespace/monitoring created

After deployment, a namespace named monitoring exists, and all resource objects will be deployed into it. In addition, the following 8 CRDs have been created:

[root@VM-1-19-centos thanos-server]# kubectl get crd | grep coreos

alertmanagerconfigs.monitoring.coreos.com 2022-07-07T07:26:48Z

alertmanagers.monitoring.coreos.com 2022-07-07T07:26:49Z

podmonitors.monitoring.coreos.com 2022-07-07T07:26:50Z

probes.monitoring.coreos.com 2022-07-07T07:26:50Z

prometheuses.monitoring.coreos.com 2022-07-07T07:26:50Z

prometheusrules.monitoring.coreos.com 2022-07-07T07:26:51Z

servicemonitors.monitoring.coreos.com 2022-07-07T07:26:52Z

thanosrulers.monitoring.coreos.com 2022-07-07T07:26:52Z

Since this is a lab environment and we want convenient external access, a few Services also need to be switched to type NodePort:

[root@VM-1-19-centos thanos-server]# vim grafana-service.yaml

    spec:
      ports:
      - name: http
        port: 3000
        targetPort: http
      type: NodePort

[root@VM-1-19-centos thanos-server]# vim prometheus-service.yaml

    spec:
      ports:
      - name: web
        port: 9090
        targetPort: web
      - name: reloader-web
        port: 8080
        targetPort: reloader-web
      type: NodePort

[root@VM-1-19-centos thanos-server]# vim alertmanager-service.yaml

    spec:
      ports:
      - name: web
        port: 9093
        targetPort: web
      - name: reloader-web
        port: 8080
        targetPort: reloader-web
      type: NodePort

2.2 Install Prometheus

[root@VM-1-19-centos thanos-server]# kubectl create -f prometheus-operator/.

alertmanager.monitoring.coreos.com/main created

networkpolicy.networking.k8s.io/alertmanager-main created

prometheusrule.monitoring.coreos.com/alertmanager-main-rules created

secret/alertmanager-main created

service/alertmanager-main created

serviceaccount/alertmanager-main created

servicemonitor.monitoring.coreos.com/alertmanager-main created

clusterrole.rbac.authorization.k8s.io/blackbox-exporter created

clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter created

configmap/blackbox-exporter-configuration created

deployment.apps/blackbox-exporter created

networkpolicy.networking.k8s.io/blackbox-exporter created

service/blackbox-exporter created

serviceaccount/blackbox-exporter created

servicemonitor.monitoring.coreos.com/blackbox-exporter created

secret/grafana-config created

secret/grafana-datasources created

configmap/grafana-dashboard-alertmanager-overview created

configmap/grafana-dashboard-apiserver created

configmap/grafana-dashboard-cluster-total created

configmap/grafana-dashboard-controller-manager created

configmap/grafana-dashboard-grafana-overview created

configmap/grafana-dashboard-k8s-resources-cluster created

configmap/grafana-dashboard-k8s-resources-namespace created

configmap/grafana-dashboard-k8s-resources-node created

configmap/grafana-dashboard-k8s-resources-pod created

configmap/grafana-dashboard-k8s-resources-workload created

configmap/grafana-dashboard-k8s-resources-workloads-namespace created

configmap/grafana-dashboard-kubelet created

configmap/grafana-dashboard-namespace-by-pod created

configmap/grafana-dashboard-namespace-by-workload created

configmap/grafana-dashboard-node-cluster-rsrc-use created

configmap/grafana-dashboard-node-rsrc-use created

configmap/grafana-dashboard-nodes-darwin created

configmap/grafana-dashboard-nodes created

configmap/grafana-dashboard-persistentvolumesusage created

configmap/grafana-dashboard-pod-total created

configmap/grafana-dashboard-prometheus-remote-write created

configmap/grafana-dashboard-prometheus created

configmap/grafana-dashboard-proxy created

configmap/grafana-dashboard-scheduler created

configmap/grafana-dashboard-workload-total created

configmap/grafana-dashboards created

deployment.apps/grafana created

networkpolicy.networking.k8s.io/grafana created

prometheusrule.monitoring.coreos.com/grafana-rules created

service/grafana created

serviceaccount/grafana created

servicemonitor.monitoring.coreos.com/grafana created

prometheusrule.monitoring.coreos.com/kube-prometheus-rules created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

deployment.apps/kube-state-metrics created

networkpolicy.networking.k8s.io/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kube-state-metrics-rules created

service/kube-state-metrics created

serviceaccount/kube-state-metrics created

servicemonitor.monitoring.coreos.com/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kubernetes-monitoring-rules created

servicemonitor.monitoring.coreos.com/kube-apiserver created

servicemonitor.monitoring.coreos.com/coredns created

servicemonitor.monitoring.coreos.com/kube-controller-manager created

servicemonitor.monitoring.coreos.com/kube-scheduler created

servicemonitor.monitoring.coreos.com/kubelet created

clusterrole.rbac.authorization.k8s.io/node-exporter created

clusterrolebinding.rbac.authorization.k8s.io/node-exporter created

daemonset.apps/node-exporter created

networkpolicy.networking.k8s.io/node-exporter created

prometheusrule.monitoring.coreos.com/node-exporter-rules created

service/node-exporter created

serviceaccount/node-exporter created

servicemonitor.monitoring.coreos.com/node-exporter created

clusterrole.rbac.authorization.k8s.io/prometheus-k8s created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created

networkpolicy.networking.k8s.io/prometheus-k8s created

prometheus.monitoring.coreos.com/k8s created

prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s-config created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

service/prometheus-k8s created

serviceaccount/prometheus-k8s created

servicemonitor.monitoring.coreos.com/prometheus-k8s created

clusterrole.rbac.authorization.k8s.io/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created

clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created

clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created

configmap/adapter-config created

deployment.apps/prometheus-adapter created

networkpolicy.networking.k8s.io/prometheus-adapter created

rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created

service/prometheus-adapter created

serviceaccount/prometheus-adapter created

servicemonitor.monitoring.coreos.com/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/prometheus-operator created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created

deployment.apps/prometheus-operator created

networkpolicy.networking.k8s.io/prometheus-operator created

prometheusrule.monitoring.coreos.com/prometheus-operator-rules created

service/prometheus-operator created

serviceaccount/prometheus-operator created

servicemonitor.monitoring.coreos.com/prometheus-operator created

If Pods fail to start because an image cannot be pulled, change the images as follows:

vim kubeStateMetrics-deployment.yaml

Change the image to bitnami/kube-state-metrics:2.3.0

vim prometheusAdapter-deployment.yaml

Change the image to selina5288/prometheus-adapter:v0.9.1

Check the deployment status:

[root@VM-1-19-centos thanos-server]# kubectl get po -n monitoring

NAME READY STATUS RESTARTS AGE

alertmanager-main-0 2/2 Running 0 4m21s

alertmanager-main-1 2/2 Running 0 5m52s

alertmanager-main-2 2/2 Running 0 3m41s

blackbox-exporter-5597bb8b7-ztb9f 3/3 Running 0 10m

grafana-5dff4b5dd5-thwnj 1/1 Running 0 10m

kube-state-metrics-fb84c8d6c-vk7wn 3/3 Running 0 9m5s

node-exporter-dlkf4 2/2 Running 0 10m

node-exporter-xtfhj 2/2 Running 0 10m

prometheus-adapter-74979cd4b5-5fcrv 1/1 Running 0 8m33s

prometheus-adapter-74979cd4b5-ggwjj 1/1 Running 0 8m33s

prometheus-k8s-0 2/2 Running 0 10m

prometheus-k8s-1 2/2 Running 0 10m

prometheus-operator-bc6bd749c-9x7c2 2/2 Running 0 10m

2.3 Access

[root@VM-1-19-centos thanos-server]# kubectl get svc -n monitoring

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager-main NodePort 172.17.253.159 <none> 9093:32387/TCP,8080:30491/TCP 11m

alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 10m

blackbox-exporter ClusterIP 172.17.255.43 <none> 9115/TCP,19115/TCP 11m

grafana NodePort 172.17.254.10 <none> 3000:31211/TCP 11m

kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 11m

node-exporter ClusterIP None <none> 9100/TCP 11m

prometheus-adapter ClusterIP 172.17.255.81 <none> 443/TCP 11m

prometheus-k8s NodePort 172.17.252.86 <none> 9090:32381/TCP,8080:32223/TCP 11m

prometheus-operated ClusterIP None <none> 9090/TCP 10m

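prometheus-operator ClusterIP None <none> 8443/TCP 11m

From the NodePorts listed above we get the following addresses (43.142.54.65 is the IP of one of the nodes):

- Prometheus: 43.142.54.65:32381

- Grafana: 43.142.54.65:31211

- Alertmanager: 43.142.54.65:32387

2.3.1 Access Grafana

The default username and password are both admin; change the password when prompted.

With internet access, dashboards can be imported simply by entering their ID. Useful templates include 8685, 8919, and 10000.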

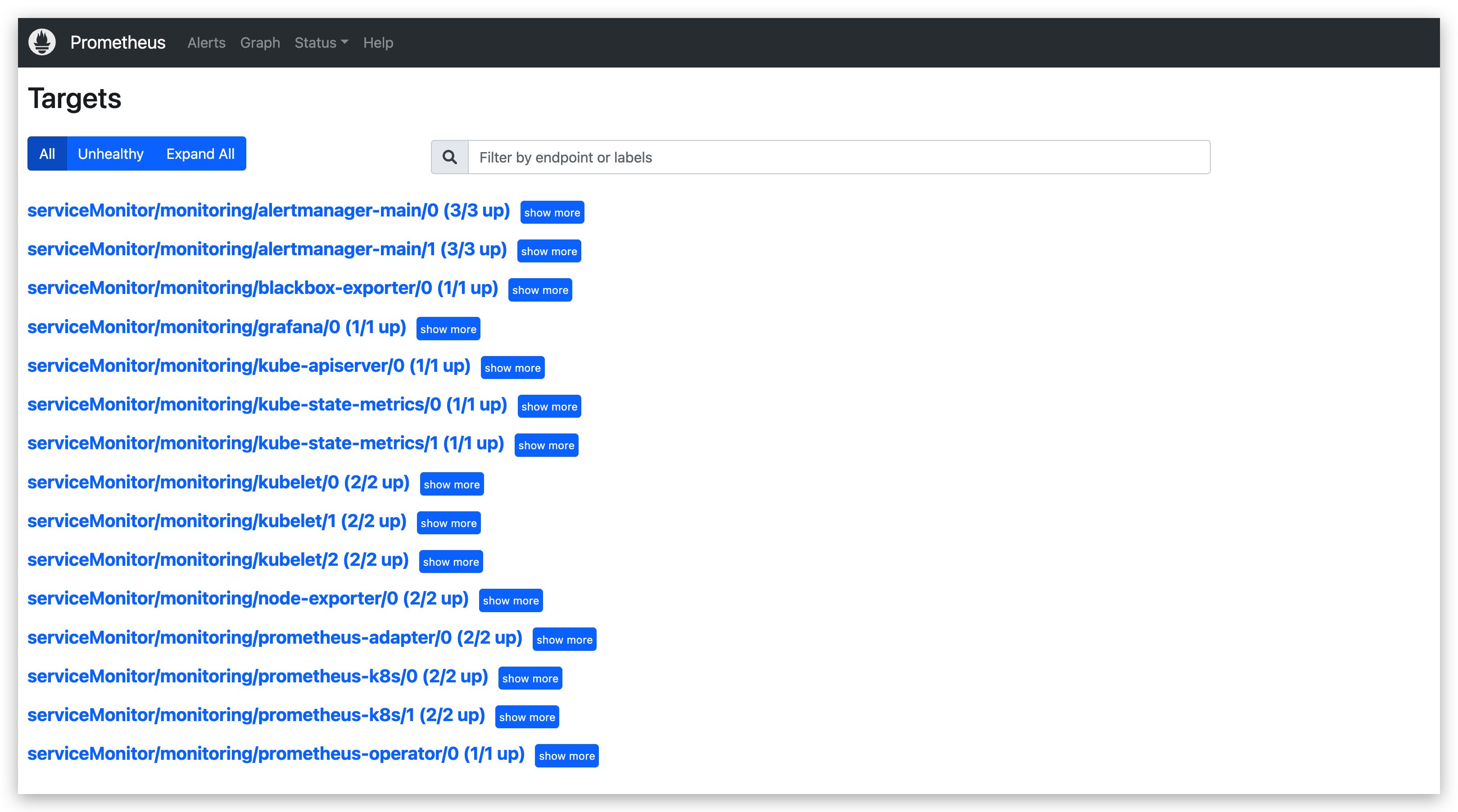

2.3.2 访问 Prometheus

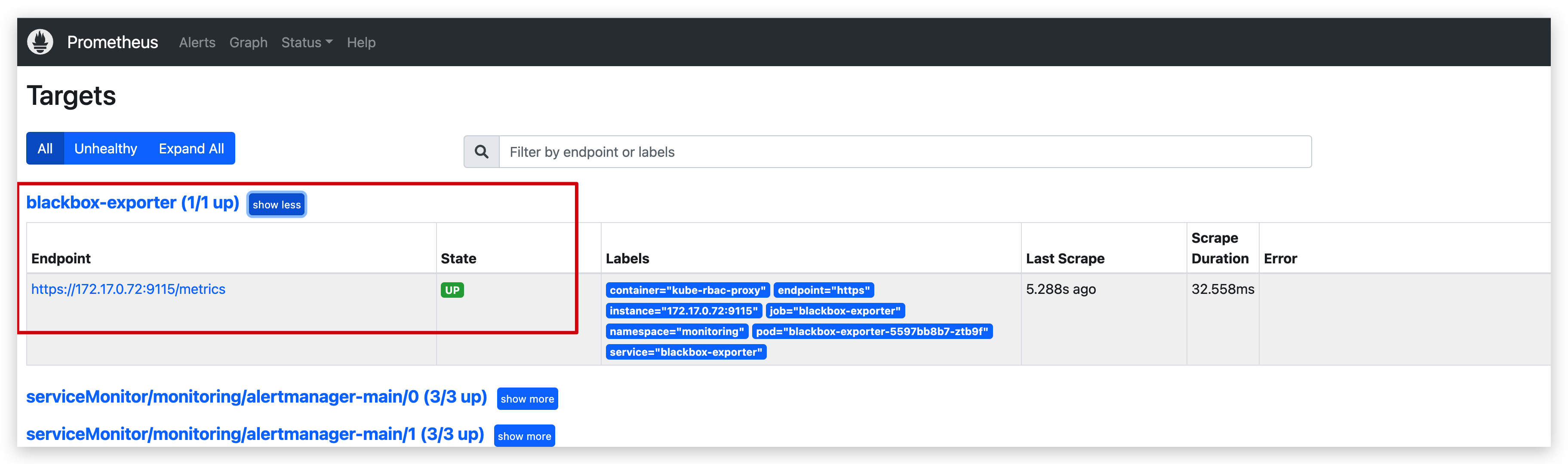

采集target全部正常

2.4 部署Thanos

2.4.1 Thanos Sidecar

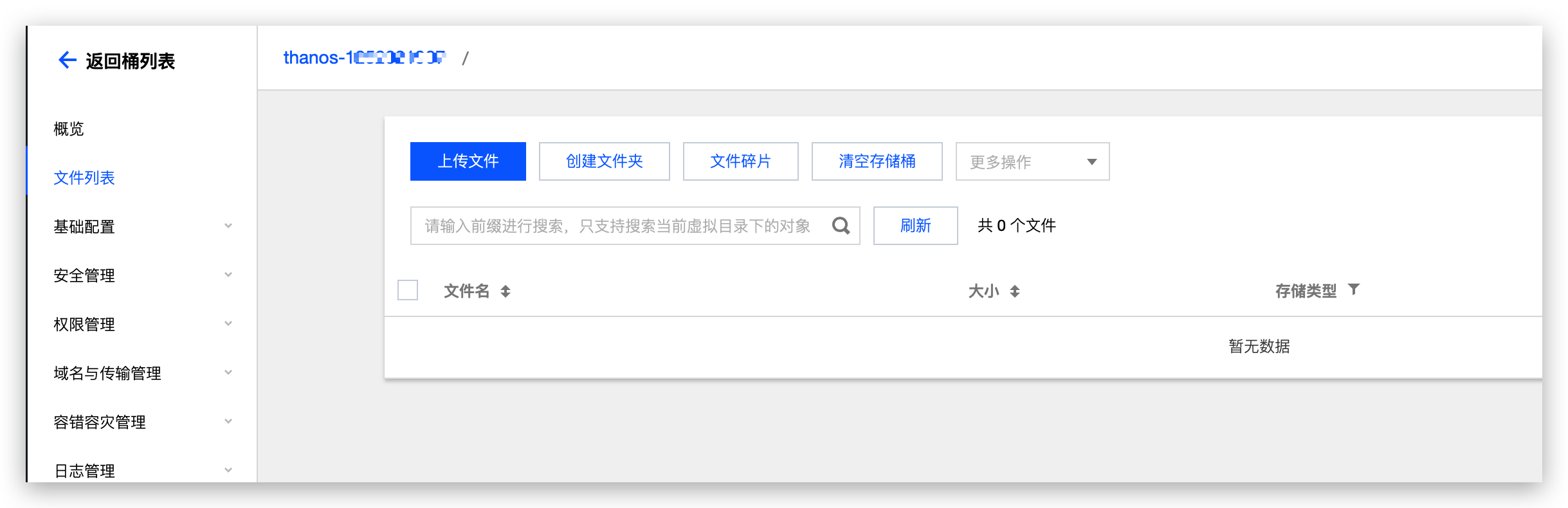

准备对象存储配置

如果我们要使用对象存储来长期保存数据,那么就要准备下对象存储的配置信息 (thanos-objectstorage-secret.yaml),比如使用腾讯云 COS 来存储:

apiVersion: v1

kind: Secret

metadata:

name: thanos-objectstorage

namespace: monitoring

type: Opaque

stringData:

objectstorage.yaml: |

type: COS

config:

bucket: "thanos"

region: "ap-shanghai"

app_id: "125xxxx907"

secret_key: ""

secret_id: ""[root@VM-1-19-centos thanos]# kubectl apply -f thanos-objectstorage-secret.yaml

secret/thanos-objectstorage created对象存储的配置准备好过后,接下来我们就可以在 Prometheus CRD 中添加对应的 Thanos 配置了,完整的资源对象如下所示:

    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      labels:
        app.kubernetes.io/component: prometheus
        app.kubernetes.io/instance: k8s
        app.kubernetes.io/name: prometheus
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 2.36.2
      name: k8s
      namespace: monitoring
    spec:
      alerting:
        alertmanagers:
        - apiVersion: v2
          name: alertmanager-main
          namespace: monitoring
          port: web
      enableFeatures: []
      ...
      serviceMonitorSelector: {}
      version: 2.36.2
      ## Add the following configuration
      thanos:
        image: thanosio/thanos:v0.18.0
        objectStorageConfig:
          key: objectstorage.yaml
          name: thanos-objectstorage

After the update, look at the Prometheus Pods again. Each Pod now has 3 containers:

[root@VM-1-19-centos thanos-server]# kubectl get po -n monitoring | grep prometheus-k8s

prometheus-k8s-0 3/3 Running 0 40s

prometheus-k8s-1 3/3 Running 0 58s

Export the generated resource object to verify it:

    - args:
      - sidecar
      - --prometheus.url=http://localhost:9090/
      - --grpc-address=[$(POD_IP)]:10901
      - --http-address=[$(POD_IP)]:10902
      - --objstore.config=$(OBJSTORE_CONFIG)
      - --tsdb.path=/prometheus
      env:
      - name: POD_IP
        valueFrom:
          fieldRef:
            apiVersion: v1
            fieldPath: status.podIP
      - name: OBJSTORE_CONFIG
        valueFrom:
          secretKeyRef:
            key: objectstorage.yaml
            name: thanos-objectstorage
      image: thanosio/thanos:v0.18.0
      imagePullPolicy: IfNotPresent
      name: thanos-sidecar
      ports:
      - containerPort: 10902
        name: http
        protocol: TCP
      - containerPort: 10901
        name: grpc
        protocol: TCP

A sidecar container has been added to the original Pod. Under normal conditions it uploads data once every 2 hours, and the sidecar logs show the uploads:

[root@VM-1-19-centos thanos-server]# kubectl logs -n monitoring prometheus-k8s-0 -c thanos-sidecar --tail 100

level=info ts=2022-07-07T13:55:43.555285552Z caller=shipper.go:334 msg="upload new block" id=01G7CF3AGXPFQ1V1WAJAH4RP67

level=info ts=2022-07-07T15:00:13.516698636Z caller=shipper.go:334 msg="upload new block" id=01G7CJSMNY0KF34XHQCH7XKHYT

level=info ts=2022-07-07T17:00:13.537501204Z caller=shipper.go:334 msg="upload new block" id=01G7CSNBXX7BV2HG0G3D6CRCFQ

level=info ts=2022-07-07T19:00:13.537711108Z caller=shipper.go:334 msg="upload new block" id=01G7D0H35Y9GHFATCP5BB54174

level=info ts=2022-07-07T21:00:13.513840463Z caller=shipper.go:334 msg="upload new block" id=01G7D7CTDZA4Y9J31ZK1X1S3K9

level=info ts=2022-07-07T23:00:13.530472788Z caller=shipper.go:334 msg="upload new block" id=01G7DE8HNZ4S97F12FXQBSJT8M

level=info ts=2022-07-08T01:00:13.529593561Z caller=shipper.go:334 msg="upload new block" id=01G7DN48XY16K0MTT61P8JDSXF
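The two-hour upload cadence follows the Prometheus TSDB block duration: the Sidecar ships each block once Prometheus has written it to disk. The fragment below is a sketch for a hand-written Prometheus manifest, under the assumption that no Operator is managing the flags; it pins the minimum and maximum block duration to the same value so that Prometheus does not compact blocks locally before they are uploaded. The config file path is illustrative.

```yaml
# Fragment of Prometheus container args for a hand-written manifest (assumption: no Operator)
args:
- --config.file=/etc/prometheus/prometheus.yml
- --storage.tsdb.path=/prometheus
- --storage.tsdb.min-block-duration=2h   # cut a block every 2 hours
- --storage.tsdb.max-block-duration=2h   # equal to min, so local compaction is disabled
```

Compaction of the uploaded blocks is then left entirely to Thanos Compact.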

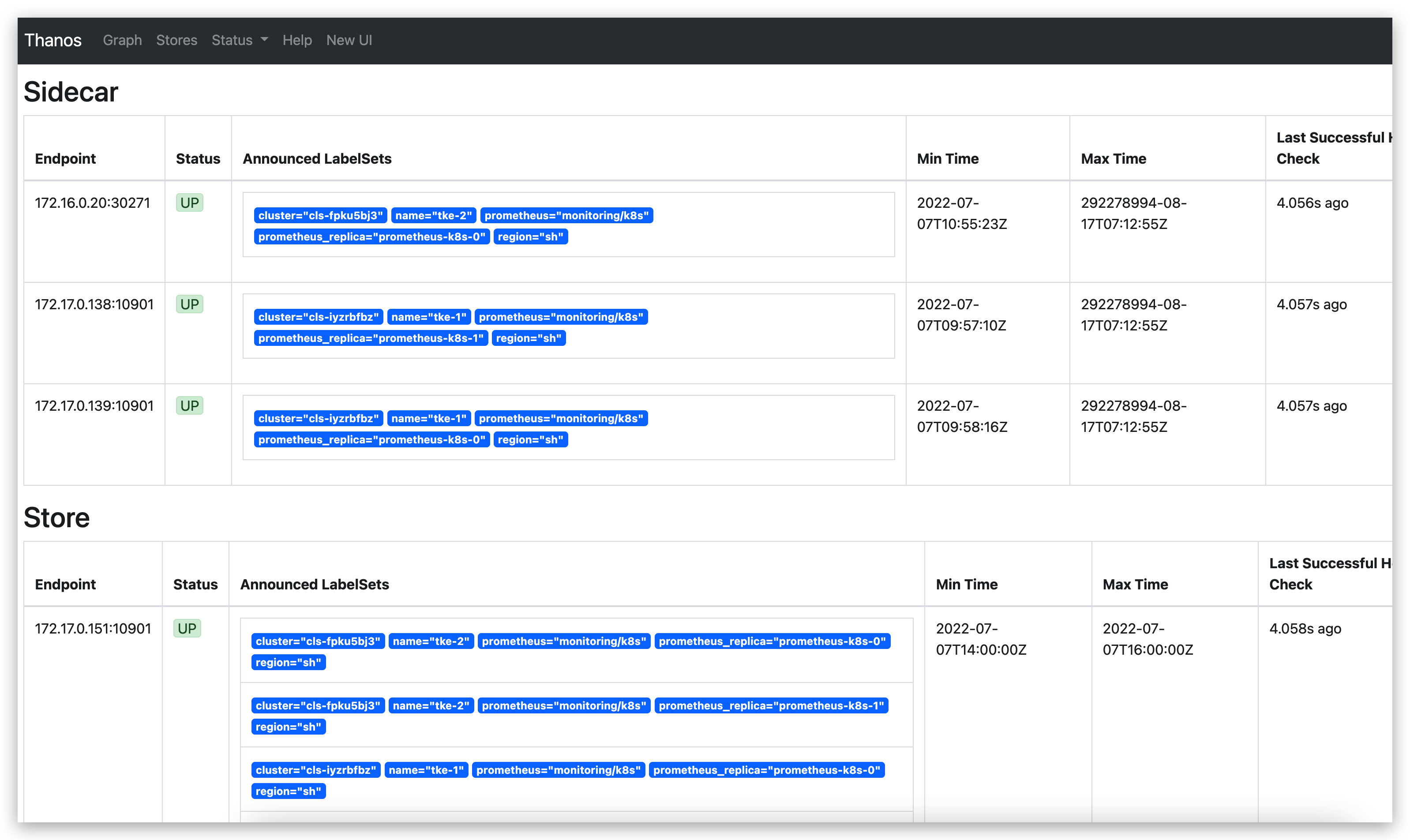

2.4.2 Thanos Querier

The Thanos Querier component retrieves metrics from all Prometheus instances in a single query. It is fully compatible with the PromQL and HTTP API of Prometheus, so it can be used with Grafana in the same way.
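Because the Querier speaks the Prometheus HTTP API, Grafana can treat it as an ordinary Prometheus data source. A minimal provisioning sketch follows; it assumes the Querier is reachable through a Service named thanos-querier on port 9090 in the monitoring namespace, as in the manifest used in this section, and the data source name is arbitrary.

```yaml
# Grafana data source provisioning file (data source name and URL are assumptions)
apiVersion: 1
datasources:
- name: Thanos
  type: prometheus          # the Querier is queried like a regular Prometheus
  access: proxy
  url: http://thanos-querier.monitoring.svc:9090
  isDefault: false
```

Existing Prometheus dashboards can then be pointed at this data source without changing their queries.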

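Because the Querier has to connect to the Sidecar and Store components, its startup arguments must reference the Thanos Sidecar we started above. It can be discovered through the corresponding headless Service; the automatically created Service is named prometheus-operated (it can be found via the StatefulSet):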

[root@VM-1-19-centos thanos-server]# kubectl describe svc -n monitoring prometheus-operated

Name: prometheus-operated

Namespace: monitoring

Labels: operated-prometheus=true

Annotations: <none>

Selector: app.kubernetes.io/name=prometheus

Type: ClusterIP

IP Families: <none>

IP: None

IPs: None

Port: web 9090/TCP

TargetPort: web/TCP

Endpoints: 172.17.0.14:9090,172.17.0.86:9090

Port: grpc 10901/TCP

TargetPort: grpc/TCP

Endpoints: 172.17.0.14:10901,172.17.0.86:10901

Session Affinity: None

Events: <none>

The complete manifest for the Thanos Querier component is shown below. Note that for multi-replica Prometheus instances deployed by Prometheus Operator, the replica label in external_labels is prometheus_replica.

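    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: thanos-querier
      namespace: monitoring
      labels:
        app: thanos-querier
    spec:
      selector:
        matchLabels:
          app: thanos-querier
      template:
        metadata:
          labels:
            app: thanos-querier
        spec:
          containers:
          - name: thanos
            image: thanosio/thanos:v0.18.0
            args:
            - query
            - --log.level=debug
            - --query.replica-label=prometheus_replica
            - --store=dnssrv+prometheus-operated:10901
            ports:
            - name: http
              containerPort: 10902
            - name: grpc
              containerPort: 10901
            resources:
              limits:
                memory: "2Gi"
                cpu: "1"
            livenessProbe:
              httpGet:
                path: /-/healthy
                port: http
              initialDelaySeconds: 10
            readinessProbe:
              httpGet:
                path: /-/ready
                port: http
              initialDelaySeconds: 15
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: thanos-querier
      namespace: monitoring
      labels:
        app: thanos-querier
    spec:
      ports:
      - port: 9090
        targetPort: http
        name: http
      type: NodePort
      selector:
        app: thanos-querier

- Because Query is stateless, it is deployed as a Deployment. No headless Service is needed; a regular Service is enough.

- Use soft anti-affinity so that Query replicas avoid being scheduled onto the same node where possible.

- Deploy multiple replicas to make Query highly available.

- --query.auto-downsampling enables automatic downsampling at query time, which improves query efficiency.

- --query.replica-label specifies the prometheus_replica external label configured on Prometheus. When Query pulls Prometheus data from the Sidecars, it recognizes this label and deduplicates automatically, so even if one replica is down, query results are unaffected as long as at least one replica is healthy. This is what makes Prometheus highly available. In the same way, a rule_replica label can be specified to make Ruler highly available.

- --store specifies the addresses of components that implement the Store API (Sidecar, Ruler, Store Gateway, Receiver). Static addresses are generally not recommended; use service discovery to find Store API addresses automatically. Within the same cluster, DNS SRV records can be used for discovery, for example dnssrv+prometheus-operated:10901, which is the Service created for the Prometheus Pods containing the Sidecar and which exposes a TCP port named grpc. Other components can be added to --store arguments in the same way. If some components are deployed outside the cluster and their DNS SRV records cannot be resolved through cluster DNS, use file-based service discovery instead: pass the --store.sd-files argument and list the other Store API addresses in a configuration file (mounted from a ConfigMap). To add an address, just update the ConfigMap; Query does not need to be restarted.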
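The recommendations in the list (multiple replicas, soft anti-affinity, automatic downsampling, and a second replica label for Ruler) could be combined roughly as follows. This is a sketch of the relevant Deployment fields only; the replica count and the affinity weight are assumptions, and the rest of the manifest is omitted.

```yaml
# Fragment of the thanos-querier Deployment (replica count and weight are assumptions)
spec:
  replicas: 2
  template:
    spec:
      affinity:
        podAntiAffinity:
          # "Soft" anti-affinity: the scheduler prefers, but does not require, separate nodes.
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              topologyKey: kubernetes.io/hostname
              labelSelector:
                matchLabels:
                  app: thanos-querier
      containers:
      - name: thanos
        image: thanosio/thanos:v0.18.0
        args:
        - query
        - --query.auto-downsampling
        - --query.replica-label=prometheus_replica
        - --query.replica-label=rule_replica   # only useful once Ruler replicas exist
        - --store=dnssrv+prometheus-operated:10901
```

The `--query.replica-label` flag may be repeated, once per label that should be ignored during deduplication.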

2.4.3 Thanos Store

Next, deploy the Thanos Store component. It works together with the Querier to retrieve historical metrics from the configured object storage bucket, so the object storage configuration must be supplied at deployment time. Once the Store component is configured, it also has to be added to the Querier:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: thanos-store
      namespace: monitoring
      labels:
        app: thanos-store
    spec:
      selector:
        matchLabels:
          app: thanos-store
      serviceName: thanos-store
      template:
        metadata:
          labels:
            app: thanos-store
        spec:
          containers:
          - name: thanos
            image: thanosio/thanos:v0.18.0
            args:
            - "store"
            - "--log.level=debug"
            - "--data-dir=/data"
            - "--objstore.config-file=/etc/secret/objectstorage.yaml"
            - "--index-cache-size=500MB"
            - "--chunk-pool-size=500MB"
            ports:
            - name: http
              containerPort: 10902
            - name: grpc
              containerPort: 10901
            livenessProbe:
              httpGet:
                port: 10902
                path: /-/healthy
              initialDelaySeconds: 10
            readinessProbe:
              httpGet:
                port: 10902
                path: /-/ready
              initialDelaySeconds: 15
            volumeMounts:
            - name: object-storage-config
              mountPath: /etc/secret
              readOnly: false
          volumes:
          - name: object-storage-config
            secret:
              secretName: thanos-objectstorage
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: thanos-store
      namespace: monitoring
    spec:
      type: ClusterIP
      clusterIP: None
      ports:
      - name: grpc
        port: 10901
        targetPort: grpc
      selector:
        app: thanos-store

[root@VM-1-19-centos thanos]# kubectl apply -f thanos-store.yaml

statefulset.apps/thanos-store created

service/thanos-store created

After deployment, the Querier must be able to discover the Store component, so add the Store to the Querier arguments:

containers:

- name: thanos

image: thanosio/thanos:v0.18.0

args:

- query

- --log.level=debug

- --query.replica-label=prometheus_replica

# Discover local store APIs using DNS SRV.

- --store=dnssrv+prometheus-operated:10901

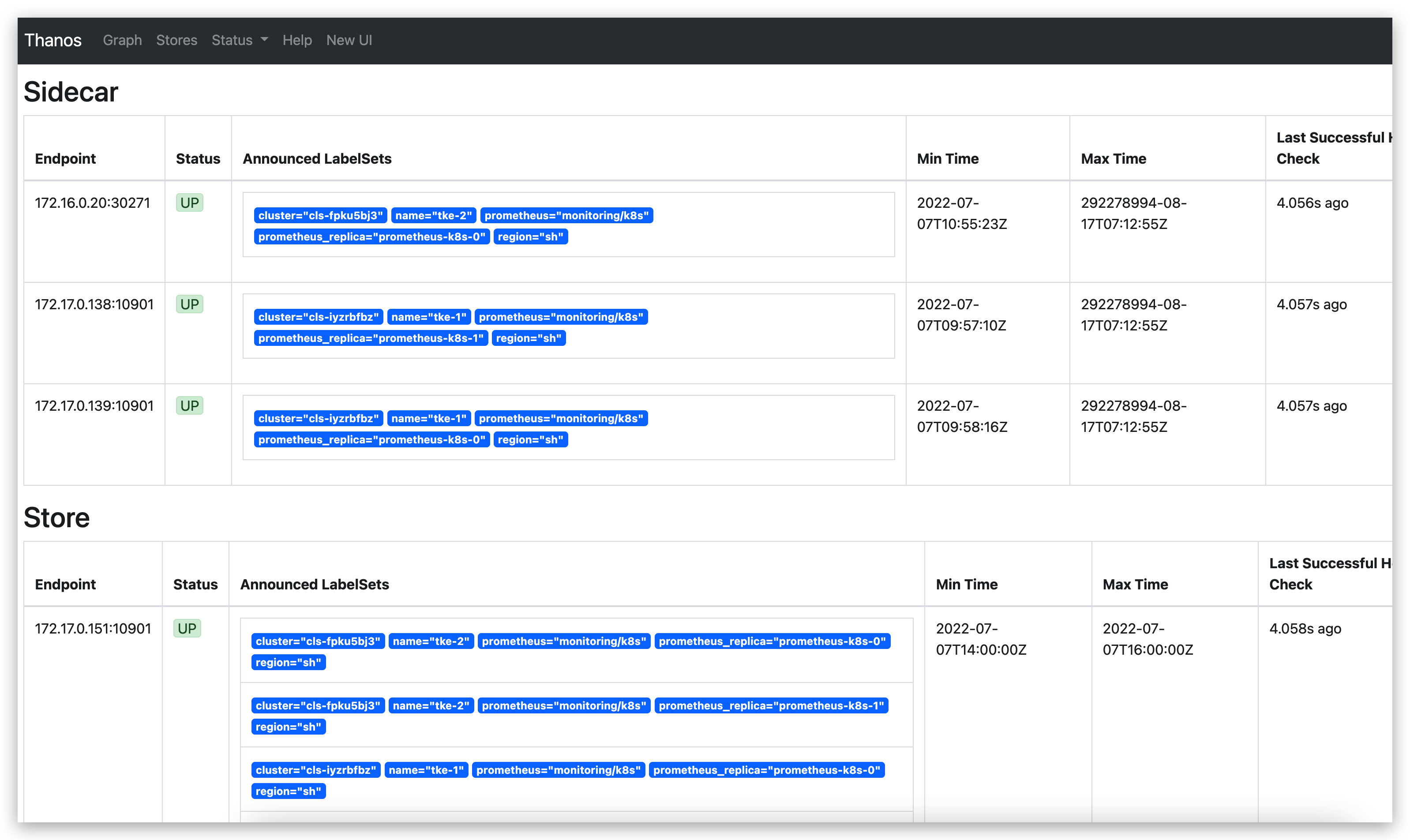

- --store=dnssrv+thanos-store:10901更新后再次前往 Querier 组件的页面查看发现的 Store 组件正常会多一个 Thanos Store 的组件

2.4.4 Thanos Compactor

The Thanos Compactor component compacts and downsamples the historical data we have collected, which reduces the amount of data that long-range queries have to read. Deployment is much the same as before; the main task is again to connect it to object storage.

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: thanos-compactor
      namespace: monitoring
      labels:
        app: thanos-compactor
    spec:
      selector:
        matchLabels:
          app: thanos-compactor
      serviceName: thanos-compactor
      template:
        metadata:
          labels:
            app: thanos-compactor
        spec:
          containers:
          - name: thanos
            image: thanosio/thanos:v0.18.0
            args:
            - "compact"
            - "--log.level=debug"
            - "--data-dir=/data"
            - "--objstore.config-file=/etc/secret/objectstorage.yaml"
            - "--wait"
            ports:
            - name: http
              containerPort: 10902
            livenessProbe:
              httpGet:
                port: 10902
                path: /-/healthy
              initialDelaySeconds: 10
            readinessProbe:
              httpGet:
                port: 10902
                path: /-/ready
              initialDelaySeconds: 15
            volumeMounts:
            - name: object-storage-config
              mountPath: /etc/secret
              readOnly: false
          volumes:
          - name: object-storage-config
            secret:
              secretName: thanos-objectstorage

3 Add Cluster B

To add a cluster, we only need to deploy Prometheus + Thanos Sidecar + Thanos Compactor.

3.1 Deploy the CRDs

[root@VM-0-20-centos thanos-server]# cd thanos-server/thanos-client

[root@VM-0-20-centos thanos-server]# kubectl create -f setup/

customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created

namespace/monitoring created

Cluster B can share the same object storage as cluster A:

    apiVersion: v1
    kind: Secret
    metadata:
      name: thanos-objectstorage
      namespace: monitoring
    type: Opaque
    stringData:
      objectstorage.yaml: |
        type: COS
        config:
          bucket: "thanos"
          region: "ap-shanghai"
          app_id: "125xxxx907"
          secret_key: ""
          secret_id: ""

[root@VM-0-20-centos thanos]# kubectl apply -f thanos-objectstorage-secret.yaml

secret/thanos-objectstorage created

3.2 Deploy Thanos Sidecar + Prometheus

[root@VM-0-20-centos thanos-server]# kubectl apply -f prometheus-operator/

The Prometheus resource carries the same Thanos configuration as in cluster A:

    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      labels:
        app.kubernetes.io/component: prometheus
        app.kubernetes.io/instance: k8s
        app.kubernetes.io/name: prometheus
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 2.36.2
      name: k8s
      namespace: monitoring
    spec:
      alerting:
        alertmanagers:
        - apiVersion: v2
          name: alertmanager-main
          namespace: monitoring
          port: web
      enableFeatures: []
      ...
      serviceMonitorSelector: {}
      version: 2.36.2
      ## Add the following configuration
      thanos:
        image: thanosio/thanos:v0.18.0
        objectStorageConfig:
          key: objectstorage.yaml
          name: thanos-objectstorage

After deployment the Pods look like this:

[root@VM-0-20-centos thanos]# kubectl get po -n monitoring

NAME READY STATUS RESTARTS AGE

kube-state-metrics-fb84c8d6c-m4mfc 3/3 Running 0 5h49m

node-exporter-mf8xr 2/2 Running 0 5h51m

node-exporter-x79fq 2/2 Running 0 5h51m

prometheus-k8s-0 3/3 Running 0 5h45m

prometheus-k8s-1 3/3 Running 0 5h45m

prometheus-operator-bc6bd749c-6gzbr 2/2 Running 0 5h51m

3.3 Deploy Thanos Compactor

[root@VM-0-20-centos thanos]# kubectl apply -f thanos-compactor.yaml

The final state is:

[root@VM-0-20-centos thanos]# kubectl get po -n monitoring

NAME READY STATUS RESTARTS AGE

kube-state-metrics-fb84c8d6c-m4mfc 3/3 Running 0 5h52m

node-exporter-mf8xr 2/2 Running 0 5h54m

node-exporter-x79fq 2/2 Running 0 5h54m

prometheus-k8s-0 3/3 Running 0 5h47m

prometheus-k8s-1 3/3 Running 0 5h48m

prometheus-operator-bc6bd749c-6gzbr 2/2 Running 0 5h54m

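thanos-compactor-0 1/1 Running 0 5h42m

3.4 Link the Clusters

Edit thanos-querier.yaml in cluster A.

File-based service discovery can be used here: pass the --store.sd-files argument and list the other Store API addresses in a configuration file (mounted from a ConfigMap). To add an address, just update the ConfigMap; Query does not need to be restarted. The arguments below take the simpler route of adding the cluster B address as a static --store entry:

    args:
    - query
    - --log.level=debug
    - --query.replica-label=prometheus_replica
    - --store=dnssrv+prometheus-operated:10901
    - --store=dnssrv+thanos-store:10901
    - --store=172.16.0.20:30271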
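For comparison, a file-based variant of the same link could look like the sketch below. The ConfigMap name, file name, and mount path are hypothetical; the target address is the cluster B address from the arguments in this section.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: thanos-query-stores        # hypothetical name
  namespace: monitoring
data:
  stores.yaml: |
    - targets:
      - 172.16.0.20:30271          # cluster B Store API address
---
# Fragment of the thanos-querier Deployment that mounts and uses the file
spec:
  template:
    spec:
      containers:
      - name: thanos
        args:
        - query
        - --query.replica-label=prometheus_replica
        - --store=dnssrv+prometheus-operated:10901
        - --store=dnssrv+thanos-store:10901
        - --store.sd-files=/etc/thanos/stores.yaml
        volumeMounts:
        - name: stores
          mountPath: /etc/thanos
      volumes:
      - name: stores
        configMap:
          name: thanos-query-stores
```

Adding another cluster then only means appending a line under `targets` in the ConfigMap, rather than editing the Deployment.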

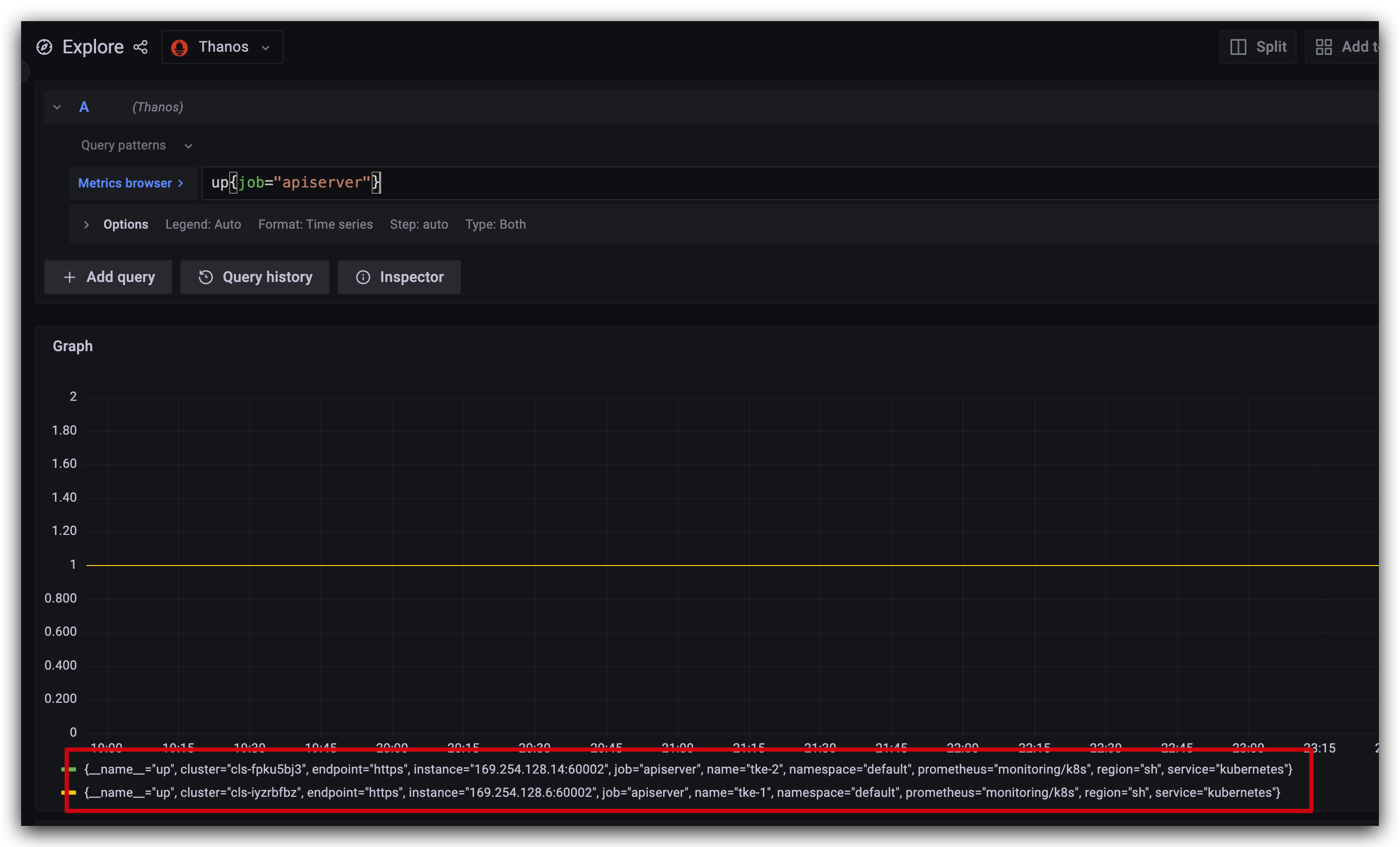

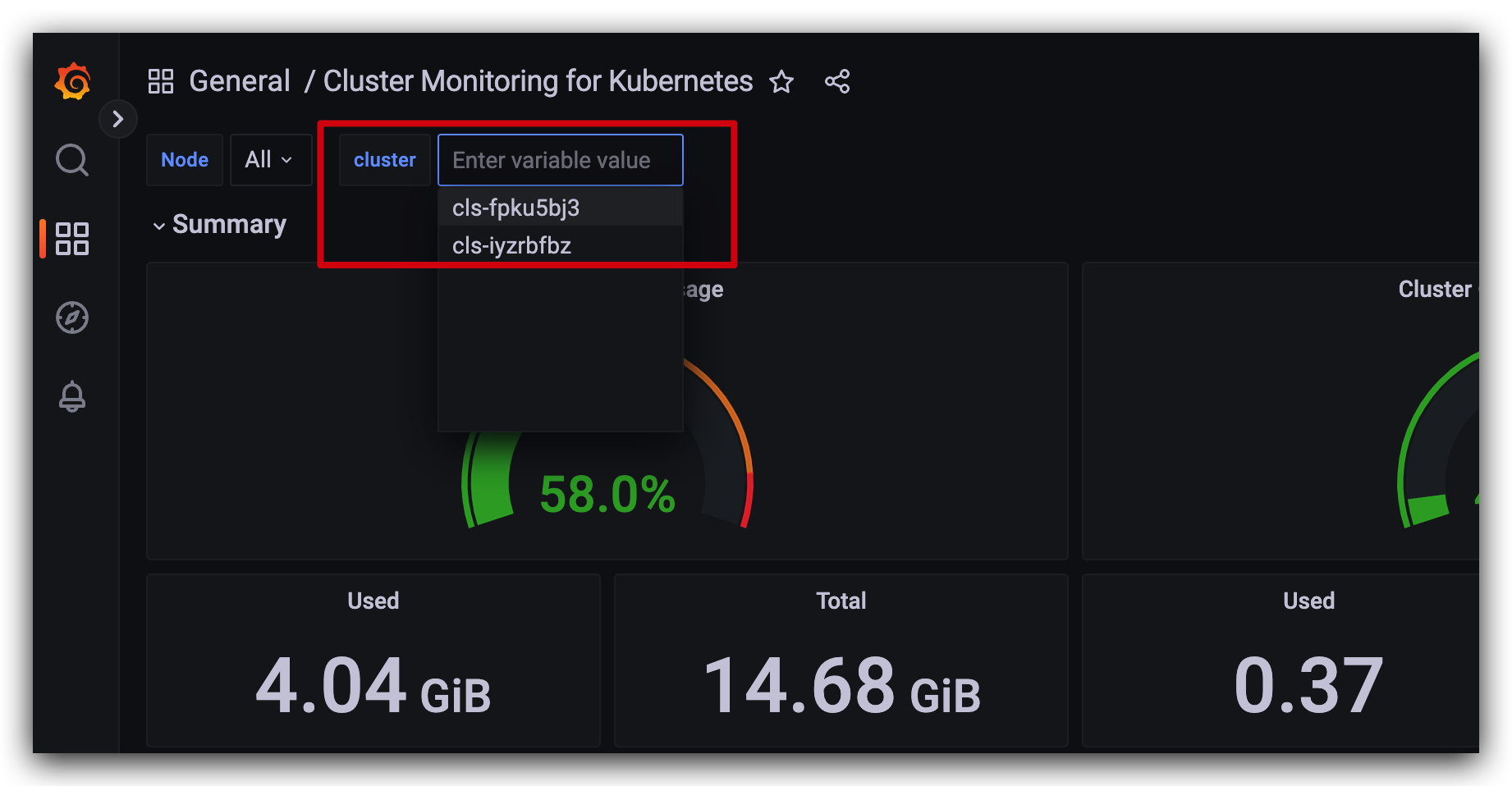

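4 Testing Multi-Cluster Metric Collection

3 Other Issues

3.1 Customizing the Prometheus Operator Configuration File

The built-in prometheus.yaml is read-only and cannot be edited, so how can it be customized?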

1. Write the Prometheus configuration for blackbox into a file named prometheus-additional.yaml with the following content:

    - job_name: blackbox-exporter
      honor_timestamps: true
      scrape_interval: 30s
      scrape_timeout: 10s
      metrics_path: /metrics
      scheme: https
      authorization:
        type: Bearer
        credentials_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      tls_config:
        insecure_skip_verify: true
      follow_redirects: true
      enable_http2: true
      relabel_configs:
      - source_labels: [job]
        separator: ;
        regex: (.*)
        target_label: __tmp_prometheus_job_name
        replacement: $1
        action: replace
      - source_labels: [__meta_kubernetes_service_label_app_kubernetes_io_component, __meta_kubernetes_service_labelpresent_app_kubernetes_io_component]
        separator: ;
        regex: (exporter);true
        replacement: $1
        action: keep
      - source_labels: [__meta_kubernetes_service_label_app_kubernetes_io_name, __meta_kubernetes_service_labelpresent_app_kubernetes_io_name]
        separator: ;
        regex: (blackbox-exporter);true
        replacement: $1
        action: keep
      - source_labels: [__meta_kubernetes_service_label_app_kubernetes_io_part_of, __meta_kubernetes_service_labelpresent_app_kubernetes_io_part_of]
        separator: ;
        regex: (kube-prometheus);true
        replacement: $1
        action: keep
      - source_labels: [__meta_kubernetes_endpoint_port_name]
        separator: ;
        regex: https
        replacement: $1
        action: keep
      - source_labels: [__meta_kubernetes_endpoint_address_target_kind, __meta_kubernetes_endpoint_address_target_name]
        separator: ;
        regex: Node;(.*)
        target_label: node
        replacement: ${1}
        action: replace
      - source_labels: [__meta_kubernetes_endpoint_address_target_kind, __meta_kubernetes_endpoint_address_target_name]
        separator: ;
        regex: Pod;(.*)
        target_label: pod
        replacement: ${1}
        action: replace
      - source_labels: [__meta_kubernetes_namespace]
        separator: ;
        regex: (.*)
        target_label: namespace
        replacement: $1
        action: replace
      - source_labels: [__meta_kubernetes_service_name]
        separator: ;
        regex: (.*)
        target_label: service
        replacement: $1
        action: replace
      - source_labels: [__meta_kubernetes_pod_name]
        separator: ;
        regex: (.*)
        target_label: pod
        replacement: $1
        action: replace
      - source_labels: [__meta_kubernetes_pod_container_name]
        separator: ;
        regex: (.*)
        target_label: container
        replacement: $1
        action: replace
      - source_labels: [__meta_kubernetes_service_name]
        separator: ;
        regex: (.*)
        target_label: job
        replacement: ${1}
        action: replace
      - separator: ;
        regex: (.*)
        target_label: endpoint
        replacement: https
        action: replace
      - source_labels: [__address__]
        separator: ;
        regex: (.*)
        modulus: 1
        target_label: __tmp_hash
        replacement: $1
        action: hashmod
      - source_labels: [__tmp_hash]
        separator: ;
        regex: "0"
        replacement: $1
        action: keep
      kubernetes_sd_configs:
      - role: endpoints
        kubeconfig_file: ""
        follow_redirects: true
        enable_http2: true
        namespaces:
          own_namespace: false
          names:
          - monitoring

2. Create a Secret object from this file:

[root@VM-1-19-centos ~]# kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring

secret/additional-configs created

3. Go to the kube-prometheus/manifests/ directory of the Prometheus Operator source (thanos-server/prometheus-operator in this article), edit prometheus-prometheus.yaml, and add the additionalScrapeConfigs setting:

      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 1000
      additionalScrapeConfigs:
        name: additional-configs
        key: prometheus-additional.yaml
      serviceAccountName: prometheus-k8s
      serviceMonitorNamespaceSelector: {}
      serviceMonitorSelector: {}

[root@VM-1-19-centos prometheus-operator]# kubectl apply -f prometheus-prometheus.yaml

prometheus.monitoring.coreos.com/k8s configured

4. Check in the browser that the configuration has taken effect and that scraping works.

3.2 Website Probing

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      name: blackbox-cm-website
      namespace: monitoring
      labels:
        app.kubernetes.io/name: blackbox-exporter
    spec:
      endpoints:
      - interval: 20s
        params:
          module:
          - http_2xx
          target:
          - https://www.baidu.com
        path: /probe
        port: http
        scheme: http
        scrapeTimeout: 5s
        metricRelabelings:
        - replacement: https://www.baidu.com
          targetLabel: instance
        - replacement: https://www.baidu.com
          targetLabel: target
        - replacement: website
          targetLabel: module
        - replacement: service
          targetLabel: targetType
        - replacement: sh
          targetLabel: targetRegion
        - replacement: http_2xx
          targetLabel: checkType
        - replacement: blackboxWatch
          targetLabel: monitoringFrom
        - replacement: prometheus-blackbox-exporter
          targetLabel: pod
        - replacement: sh
          targetLabel: region
      - interval: 20s
        params:
          module:
          - http_2xx
          target:
          - https://www.qq.com
        path: /probe
        port: http
        scheme: http
        scrapeTimeout: 5s
        metricRelabelings:
        - replacement: https://www.qq.com
          targetLabel: instance
        - replacement: https://www.qq.com
          targetLabel: target
        - replacement: website
          targetLabel: module
        - replacement: service
          targetLabel: targetType
        - replacement: sh
          targetLabel: targetRegion
        - replacement: http_2xx
          targetLabel: checkType
        - replacement: blackboxWatch
          targetLabel: monitoringFrom
        - replacement: prometheus-blackbox-exporter
          targetLabel: pod
        - replacement: sh
          targetLabel: region
      selector:
        matchLabels:
          app.kubernetes.io/name: blackbox-exporter

[root@VM-1-19-centos ~]# kubectl apply -f blackbox-website.yaml

servicemonitor.monitoring.coreos.com/blackbox-cm-website created
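Since the probes.monitoring.coreos.com CRD was installed earlier, the same two sites could probably also be checked with a Probe object, which avoids repeating the relabeling per target. The sketch below is an alternative under assumptions, not the configuration used in this article: the object name is hypothetical, and the prober address assumes the blackbox-exporter Service in the monitoring namespace serves plain HTTP probes on port 19115.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: Probe
metadata:
  name: website-probe              # hypothetical name
  namespace: monitoring
spec:
  interval: 20s
  scrapeTimeout: 5s
  module: http_2xx                 # blackbox exporter module, same as in the ServiceMonitor
  prober:
    url: blackbox-exporter.monitoring.svc:19115   # assumed prober address
  targets:
    staticConfig:
      static:
      - https://www.baidu.com
      - https://www.qq.com
```

Whether Prometheus picks the object up depends on the `probeSelector` and `probeNamespaceSelector` fields of the Prometheus resource, which are not shown in the excerpts of this article.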